Data Pipeline

What Is a Data Pipeline?

A data pipeline is a means of moving data from one place (the source) to a destination (such as a data warehouse). Along the way, data is transformed and optimized, arriving in a state that can be analyzed and used to develop business insights.

A data pipeline is essentially the set of steps involved in aggregating, organizing, and moving data. Modern data pipelines automate many of the manual steps involved in transforming and optimizing continuous data loads. Typically, this includes loading raw data into a staging table for interim storage, transforming it there, and then inserting the result into the destination reporting tables.
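The staging-then-reporting flow described above can be sketched in a few lines of Python using the standard-library sqlite3 module. This is only an illustrative sketch, not a production design: the table names (staging_orders, report_customer_totals), the sample records, and the run_pipeline function are all hypothetical, and an in-memory SQLite database stands in for a real data warehouse.

```python
import sqlite3

# Hypothetical raw records as they might arrive from a source system:
# (order_id, customer, amount), all untyped text, some of it messy.
RAW_ORDERS = [
    ("1001", " alice ", "19.99"),
    ("1002", "BOB", "5.00"),
    ("1003", "alice", "not-a-number"),  # malformed amount, dropped in step 2
]


def run_pipeline(conn, raw_rows):
    """Load raw rows into staging, transform them, and fill the reporting table."""
    cur = conn.cursor()
    cur.execute(
        "CREATE TABLE IF NOT EXISTS staging_orders "
        "(order_id TEXT, customer TEXT, amount TEXT)"
    )
    cur.execute(
        "CREATE TABLE IF NOT EXISTS report_customer_totals "
        "(customer TEXT PRIMARY KEY, order_count INTEGER, total_cents INTEGER)"
    )

    # Step 1: load the raw data, unchanged, into the staging table.
    cur.execute("DELETE FROM staging_orders")
    cur.executemany("INSERT INTO staging_orders VALUES (?, ?, ?)", raw_rows)

    # Step 2: transform. Normalize customer names, convert amounts to
    # integer cents, and skip rows whose amount cannot be parsed.
    totals = {}
    staged = cur.execute(
        "SELECT order_id, customer, amount FROM staging_orders"
    ).fetchall()
    for _order_id, customer, amount in staged:
        try:
            cents = round(float(amount) * 100)
        except ValueError:
            continue
        name = customer.strip().lower()
        count, total = totals.get(name, (0, 0))
        totals[name] = (count + 1, total + cents)

    # Step 3: insert the transformed result into the reporting table.
    cur.execute("DELETE FROM report_customer_totals")
    cur.executemany(
        "INSERT INTO report_customer_totals VALUES (?, ?, ?)",
        [(name, count, total) for name, (count, total) in sorted(totals.items())],
    )
    conn.commit()

    return cur.execute(
        "SELECT customer, order_count, total_cents "
        "FROM report_customer_totals ORDER BY customer"
    ).fetchall()


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    for row in run_pipeline(connection, RAW_ORDERS):
        print(row)
```

Keeping the raw rows in a staging table means the transformation can be rerun or debugged without going back to the source. A real pipeline would usually schedule these steps and load incrementally rather than clearing and rebuilding the tables on every run.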

Related Resources

Navigating the Data Landscape: A Deep Dive into Warehouses, Lakes, Meshes, and Fabrics

It’s your first day at “TechTonic Innovations,” a (fictional) startup that’s been making waves in the tech industry. As you enter their modern office, you’re greeted with smiles, handshakes, and the subtle hum of servers in the background. You’ve been brought in as the new Data Strategist, and you’re eager to dive into the heart […]

Read More

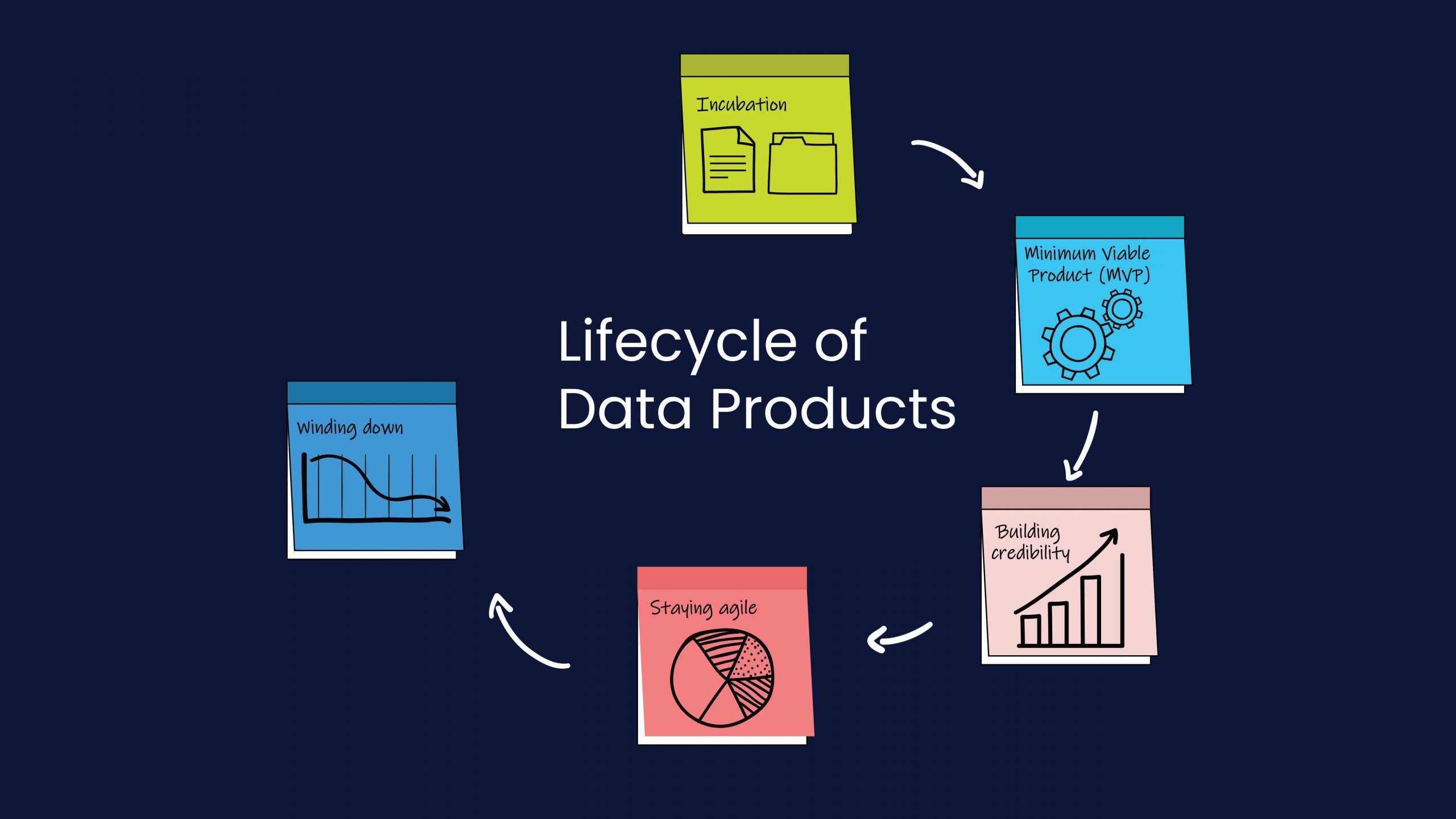

Data Product Lifecycle: Evolution and Best Practices

Data products have exploded in popularity over the last few years. As an industry, we are where the automobile industry was around the turn of the 20th century. We are slowly transitioning from building hand-crafted, exclusive products for Big Tech customers to widespread commoditization. Soon, efficiency, maintenance, standards, and assembly lines are going to be […]

Read More

Data Fabric: Unraveling the Future of Integrated Data Management

Scene 1: Picture waking up to the soft strumming of the acoustic guitar on Bon Iver’s “Holocene”, a song recommendation from Spotify based on your recent obsession with indie folk. Scene 2: As you sip your morning coffee, you scroll through your Amazon app, noticing a recommendation for a book on “Modern Folklore and Music.” […]
