Overfitting and Underfitting

Overfitting and Underfitting in Machine Learning

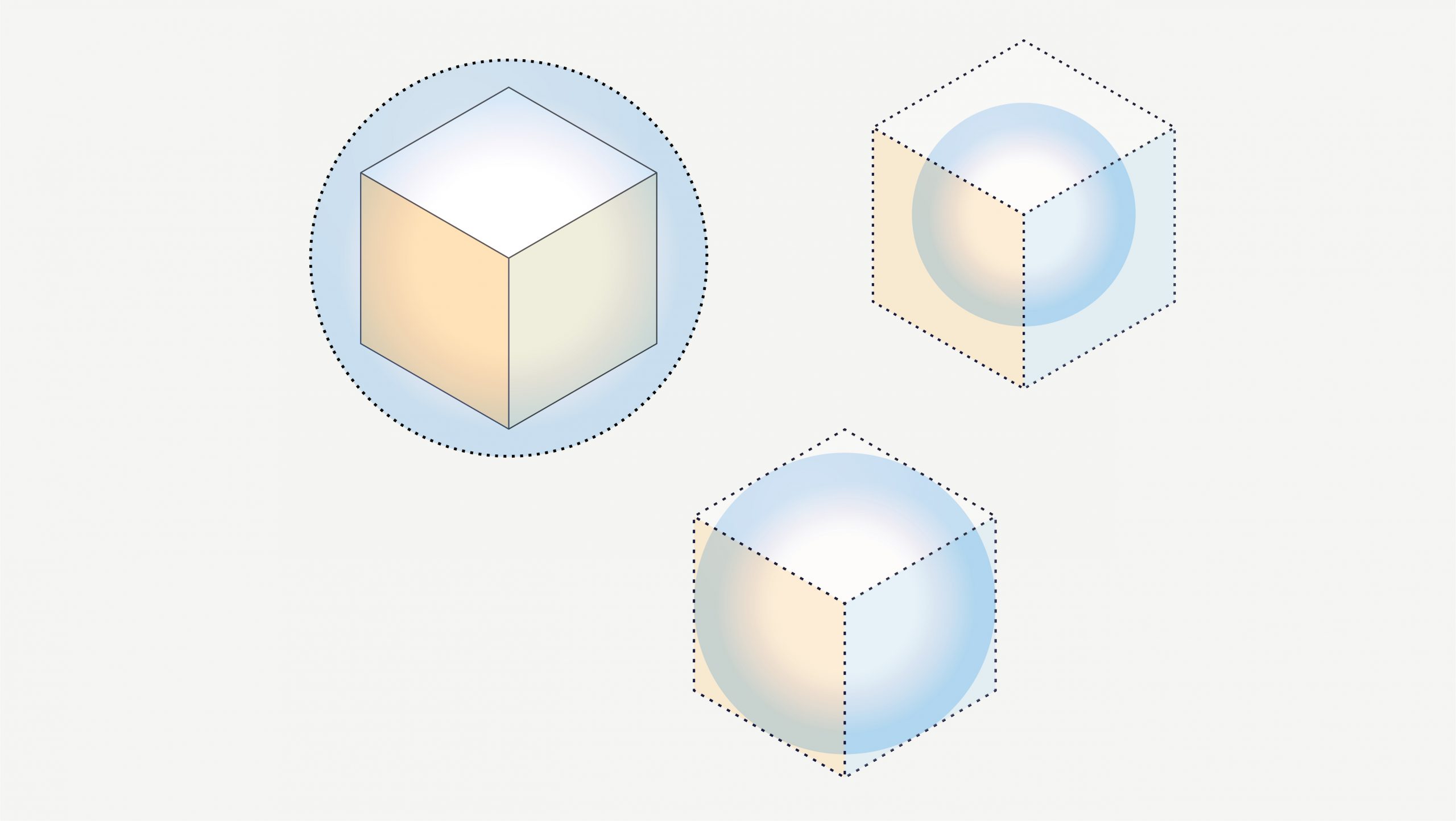

In machine learning, overfitting and underfitting are two common problems that can occur when building predictive models. Overfitting refers to a scenario where a model performs exceptionally well on the training data but fails to generalize well to new, unseen data. On the other hand, underfitting occurs when a model is too simple and fails to capture the underlying patterns and relationships in the data.

Overfitting can be a result of a model that is too complex or when it is trained for too long. It occurs when the model starts memorizing the noise in the training data rather than capturing the true underlying patterns. As a result, the model becomes too specific to the training data and fails to generalize well to new data. This can lead to poor performance when the model is deployed in real-world scenarios.

Underfitting, on the other hand, occurs when a model is too simple to capture the complexity of the data. It can happen when the model lacks sufficient capacity or when it is not trained for long enough. An underfit model fails to capture important patterns and relationships in the data, leading to poor performance on both the training and test data.

To address overfitting, several techniques can be employed. One common approach is to use regularization techniques such as L1 or L2 regularization, which add a penalty term to the loss function. This encourages the model to have smaller weights and reduces its complexity. Another technique is early stopping, where training is stopped before the model starts overfitting.

To address underfitting, one can consider increasing the complexity of the model. This can be achieved by adding more features or using more advanced algorithms. Additionally, increasing the amount of training data or training for longer periods can also help mitigate underfitting.

In summary, overfitting and underfitting are common challenges in machine learning. Both can lead to poor performance of predictive models. Understanding these concepts and employing appropriate techniques can help improve model performance and generalization capability.

Related Article: https://www.scribbledata.io/overfitting-and-underfitting-in-ml-introduction-techniques-and-future/

Related Resources

Overfitting and Underfitting in ML: Introduction, Techniques, and Future

In 2016, the tech world was all ears and eyes. Microsoft was gearing up to introduce Tay, an AI chatbot designed to chit-chat and learn from users on Twitter. The hype was real: this was supposed to be a glimpse into the future where AI and humans would be best buddies. But, in a plot […]

Read More

Mastering Generative AI: A comprehensive guide

The year was 2018. Art enthusiasts, collectors, and critics from around the world gathered at Christie’s, one of the most prestigious auction houses. The spotlight was on a unique portrait titled “Edmond de Belamy.” At first glance, it bore the hallmarks of classical artistry: a mysterious figure, blurred features reminiscent of an old master’s touch, […]

Read More

2023: A Critical Year for ML’s Rapid Growth

As 2022 draws to a close, it is time to reflect on the year gone by and welcome 2023! I’d like to take this opportunity to talk about some of the highs, the lows, the opportunities and learnings in 2022, how we’ve seen the market evolving, how it’s impacted some of the choices we’ve made […]

Read MoreStay updated on the latest and greatest at Scribble Data

Sign up to our newsletter and get exclusive access to our launches and updates