The year was 2018. Art enthusiasts, collectors, and critics from around the world gathered at Christie’s, one of the most prestigious auction houses. The spotlight was on a unique portrait titled “Edmond de Belamy.” At first glance, it bore the hallmarks of classical artistry: a mysterious figure, blurred features reminiscent of an old master’s touch, and an aura that seemed to whisper tales from centuries past. The room buzzed with speculation. Who was the forgotten master behind this piece?

But as the gavel struck, sealing a final bid of a staggering $432,500, the truth emerged. The creator of this artwork wasn’t an artist from the annals of history. It wasn’t even human. “Edmond de Belamy” was the brainchild of a Generative Adversarial Network, a cutting-edge AI model. This revelation sent ripples across the art community, challenging long-held notions of creativity, originality, and artistic expression.

This event wasn’t just an anomaly; it was a harbinger of things to come. We are on the brink of a transformative era where algorithms are not mere tools but active participants in the creative process. As we embark on this exploration, we’ll journey through the evolution, capabilities, and potential of generative AI. From its mathematical underpinnings to its real-world applications, we’ll uncover how this technology is reshaping industries and redefining the boundaries of human and machine collaboration.

Historical Evolution of Generative AI


Rewind to the mid-20th century, when a brilliant mind named Alan Turing pondered a profound question: “Can machines think?” This inquiry, seemingly simple yet deeply philosophical, set the stage for the birth of artificial intelligence. Turing’s musings laid the foundational stones upon which the edifice of AI would be built.

As decades passed, the dream of machines that could emulate human-like thinking began to take shape with the rise of neural networks in the 1980s and 1990s. These brain-inspired models promised a future where machines could learn, think, and even create. However, the true potential of neural networks remained dormant until the 2000s, when a combination of data abundance and computational power breathed life into them.

Enter the era of Generative Adversarial Networks (GANs) in 2014. Introduced by Ian Goodfellow and his colleagues, GANs could conjure realistic images from random noise. The art world, always looking for the avant-garde, was quick to embrace this new medium, leading to groundbreaking creations like “Edmond de Belamy.”

In parallel, the realm of text witnessed its own revolution. Transformer architectures and models like OpenAI’s GPT series emerged, wielding the power to craft coherent narratives that are often hard to distinguish from human-written prose.

Today, as we stand at the crossroads of art and technology, models like OpenAI’s DALL·E and Midjourney are pushing the boundaries of what generative AI can achieve, turning textual descriptions into vivid visual masterpieces.

Foundational Concepts of Generative AI

At the heart of the generative AI revolution lies a set of foundational concepts that underpin its very essence. To truly grasp the power and potential of generative AI, it’s crucial to understand these bedrock principles.


The term “generative” in the realm of artificial intelligence refers to the ability of a model to generate new, previously unseen data that mirrors the characteristics of the training data. Imagine an artist who, after studying countless landscapes, can paint a new scene that, while unique, captures the essence of the landscapes they’ve observed. Similarly, a generative model learns patterns, structures, and nuances from its training data and then crafts new data points that fit within that learned framework.

Machine learning models can be broadly categorized into two types: discriminative and generative. Discriminative models, as the name suggests, focus on discrimination or classification. They learn the boundaries between different classes and aim to correctly label new data points. On the other hand, generative models are concerned with understanding how the data is generated. They capture the underlying data distribution, enabling them not just to classify but to create new data points that feel authentic.
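To make the distinction concrete, here is a minimal sketch in Python. It assumes a toy one-dimensional dataset and models each class with a single Gaussian; the function names (`fit_class_gaussians`, `classify`, `generate`) and the sample measurements are invented for illustration and do not come from any library. Because the model learns how each class's data is distributed, the same fitted parameters can both label a new point and produce a new one.

```python
import math
import random
from statistics import mean, pstdev


def fit_class_gaussians(samples_by_class):
    """Generative step: estimate p(x | class) as a 1-D Gaussian per class."""
    total = sum(len(xs) for xs in samples_by_class.values())
    return {
        label: {"mean": mean(xs), "std": pstdev(xs), "prior": len(xs) / total}
        for label, xs in samples_by_class.items()
    }


def log_gaussian(x, mu, sigma):
    """Log density of a Gaussian with mean mu and standard deviation sigma."""
    return -0.5 * math.log(2 * math.pi * sigma**2) - (x - mu) ** 2 / (2 * sigma**2)


def classify(model, x):
    """Label x with the class maximizing log p(x | class) + log p(class)."""
    def score(label):
        params = model[label]
        return log_gaussian(x, params["mean"], params["std"]) + math.log(params["prior"])
    return max(model, key=score)


def generate(model, label, rng):
    """Create a new, previously unseen data point for the given class."""
    return rng.gauss(model[label]["mean"], model[label]["std"])


# Hypothetical toy data: shoulder heights in centimeters.
heights_cm = {
    "cat": [23.0, 25.0, 24.0, 26.0, 22.0],
    "dog": [55.0, 60.0, 58.0, 62.0, 65.0],
}
model = fit_class_gaussians(heights_cm)
```

Calling `classify(model, 24.5)` uses the learned distributions to pick a label, while `generate(model, "dog", random.Random(0))` draws a fresh value from the same distributions. A purely discriminative model would learn only the boundary between the two classes and would have nothing to sample from.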

The Mathematical Backbone:

Generative models operate on the principles of probability theory and statistics, aiming to capture the underlying structure of the data they’re trained on. Here’s a more detailed breakdown:

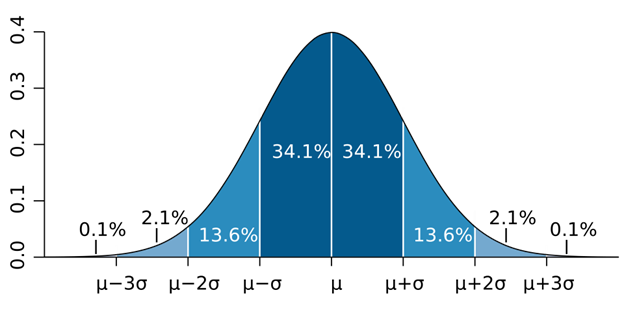

- Probability Distributions

Ref: Wikipedia

At the core of generative models is the concept of probability distribution. This mathematical function describes the likelihood of obtaining the possible values that a random variable can take. In simpler terms, it tells us how data points in a dataset are spread out and how frequently they occur. For generative models, understanding the probability distribution of the training data is crucial because it allows the model to generate new data points that are consistent with this distribution.

- Maximum Likelihood Estimation (MLE): MLE is a method used to estimate the parameters of a statistical model. In the context of generative AI, it’s used to find the parameters that make the observed data most probable under the assumed model. Imagine you have a set of data points, and you’re trying to fit a curve that best represents them. MLE helps in adjusting the curve in such a way that the overall likelihood of observing those data points is maximized.

- Latent Variables and Hidden Structures: Generative models often deal with latent variables, which are variables that are not directly observed but are inferred from the observed data. These latent variables capture hidden structures in the data. For instance, in a dataset of images of faces, latent variables might capture aspects like facial expressions, lighting conditions, or angles, even if these aren’t explicitly labeled.

- Bayesian Inference: Bayesian inference is a method of statistical inference in which Bayes’ theorem is used to update the probability estimate for a hypothesis as more evidence or information becomes available. Generative models such as Variational Autoencoders (VAEs) rely on an approximate form of Bayesian inference, known as variational inference, to estimate the distribution of their latent variables and to optimize the model parameters.

- Sampling Techniques: Once a generative model has learned the probability distribution of its training data, it can generate new data points by sampling from this distribution. Techniques like Monte Carlo sampling or Markov Chain Monte Carlo (MCMC) are often employed to draw these samples, so that the generated data points reflect the learned distribution, which in turn approximates the true data distribution.
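Two of the ideas in this list, maximum likelihood estimation and sampling, can be sketched together in a few lines of Python. The example assumes the simplest possible model, a single one-dimensional Gaussian, for which the maximum likelihood estimates have a closed form; real generative models have millions of parameters and are fit by gradient-based optimization instead. The function names and the observed values are hypothetical.

```python
import math
import random


def gaussian_mle(data):
    """Closed-form maximum likelihood estimates for a 1-D Gaussian."""
    n = len(data)
    mu = sum(data) / n
    # The MLE of the variance divides by n, not by n - 1.
    variance = sum((x - mu) ** 2 for x in data) / n
    return mu, math.sqrt(variance)


def log_likelihood(data, mu, sigma):
    """Log probability of the observed data under a Gaussian(mu, sigma)."""
    return sum(
        -0.5 * math.log(2 * math.pi * sigma**2) - (x - mu) ** 2 / (2 * sigma**2)
        for x in data
    )


def sample(mu, sigma, count, rng):
    """Generate new data points by sampling from the fitted distribution."""
    return [rng.gauss(mu, sigma) for _ in range(count)]


# Hypothetical observations used only for illustration.
observed = [4.8, 5.1, 5.0, 4.9, 5.2]
mu_hat, sigma_hat = gaussian_mle(observed)
```

Any other choice of `mu` or `sigma` gives a lower value of `log_likelihood(observed, mu, sigma)` than the fitted pair, which is exactly what "most probable under the assumed model" means. Calling `sample(mu_hat, sigma_hat, 1000, random.Random(42))` then produces new values that cluster around the same center as the observations.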

In essence, the mathematical foundation of generative models is a blend of probability theory, statistical inference, and optimization techniques.
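The Bayesian updating mentioned above can also be illustrated with a small sketch. It assumes a deliberately simple setting, estimating the probability of a yes/no outcome with a Beta prior, because in that case Bayes' theorem reduces to adding counts. The function names and the counts are hypothetical, and models like VAEs use far more elaborate approximations of the same idea.

```python
def update_beta(alpha, beta, successes, failures):
    """Posterior Beta parameters after observing new yes/no outcomes.

    With a Beta(alpha, beta) prior on an unknown probability, Bayes'
    theorem gives a Beta(alpha + successes, beta + failures) posterior.
    """
    return alpha + successes, beta + failures


def beta_mean(alpha, beta):
    """Expected value of a Beta(alpha, beta) distribution."""
    return alpha / (alpha + beta)


# Start from a uniform prior, Beta(1, 1), then observe 7 successes and 3 failures.
prior = (1.0, 1.0)
posterior = update_beta(*prior, successes=7, failures=3)
```

The prior belief has a mean of 0.5; after the evidence arrives, `beta_mean(*posterior)` moves toward the observed success rate. Feeding in further observations repeats the same update, which is the sense in which the estimate improves as more information becomes available.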

Key Generative Techniques and Their Evolution


Generative Adversarial Networks, or GANs, have revolutionized the world of generative AI. At their core, GANs consist of a dueling pair: the Generator, which crafts images, and the Discriminator, which evaluates them. They engage in a continuous feedback loop until the Generator crafts an image so convincing that the Discriminator can’t tell it apart from a real one. This technique has enabled the creation of realistic video game environments, innovative fashion designs, and even the faces of non-existent people, as showcased on sites like thispersondoesnotexist.com.

Over time, GANs have evolved, giving rise to variations like DCGANs, which use convolutional layers suited to images, CycleGANs for style transfer between image domains, and BigGANs that produce high-resolution visuals.
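The tug-of-war between the Generator and the Discriminator comes down to two loss functions. The sketch below shows them in plain Python, assuming the Discriminator outputs a probability between 0 and 1 that an image is real. It uses the non-saturating generator loss, a common variant, and it leaves out the networks and the training loop entirely; all function names are illustrative rather than taken from a framework.

```python
import math


def binary_cross_entropy(prediction, target):
    """Penalty for predicting `prediction` when the true label is `target`."""
    eps = 1e-12  # keep log() away from zero
    p = min(max(prediction, eps), 1.0 - eps)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(d_on_real, d_on_fake):
    """The Discriminator wants to output 1 for real images and 0 for fakes."""
    real_term = sum(binary_cross_entropy(p, 1.0) for p in d_on_real) / len(d_on_real)
    fake_term = sum(binary_cross_entropy(p, 0.0) for p in d_on_fake) / len(d_on_fake)
    return real_term + fake_term


def generator_loss(d_on_fake):
    """The Generator wants the Discriminator to output 1 for its fakes."""
    return sum(binary_cross_entropy(p, 1.0) for p in d_on_fake) / len(d_on_fake)
```

When the Discriminator is confident and correct, for example `discriminator_loss([0.9, 0.95], [0.1, 0.05])`, its loss is low and the Generator's loss on the same fakes is high. When the Discriminator can do no better than answer 0.5 for everything, the Generator has reached the point described above, where fakes cannot be told apart from real images.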

Variational Autoencoders (VAEs) offer a different approach. They compress data into a lower-dimensional form and then reconstruct it, introducing a touch of randomness in the process. This unique capability makes VAEs particularly adept at tasks where slight variations are beneficial, such as in facial recognition systems, where they generate varied facial features to aid in more accurate identification.
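The "touch of randomness" in a VAE is usually implemented with what is known as the reparameterization trick. The sketch below assumes the encoder has already produced a mean and a log-variance for each latent dimension; the encoder and decoder networks themselves are omitted, and the function names are hypothetical.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample a latent vector z = mu + sigma * eps, with eps ~ N(0, 1)."""
    return [
        m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
        for m, lv in zip(mu, log_var)
    ]


def kl_to_standard_normal(mu, log_var):
    """KL divergence from N(mu, sigma^2) to N(0, 1), summed over dimensions.

    This term is added to the reconstruction error during training and
    keeps the latent space close to a standard normal distribution.
    """
    return -0.5 * sum(
        1.0 + lv - m**2 - math.exp(lv) for m, lv in zip(mu, log_var)
    )
```

Two calls to `reparameterize` with the same `mu` and `log_var` return slightly different latent vectors, and decoding each one yields a slightly different reconstruction. That is where the variations in a VAE's output come from.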


Recurrent Neural Networks (RNNs) stand out in their ability to recognize and generate sequences. This makes them invaluable for tasks that involve a series of data points, like time series analysis. Their real-world applications are vast, from powering chatbots that generate human-like responses to creating new music compositions in apps.
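What lets an RNN handle sequences is a hidden state that is carried from one step to the next. Here is a minimal sketch of that recurrence in plain Python, assuming the simplest (Elman-style) formulation with a tanh activation. The weights would normally be learned during training; here they are passed in by the caller, and the names are illustrative.

```python
import math


def rnn_step(x, h_prev, w_xh, w_hh, b):
    """One recurrence: h_t = tanh(W_xh x_t + W_hh h_{t-1} + b)."""
    hidden_size = len(b)
    h_new = []
    for i in range(hidden_size):
        total = b[i]
        total += sum(w_xh[i][j] * x[j] for j in range(len(x)))
        total += sum(w_hh[i][k] * h_prev[k] for k in range(hidden_size))
        h_new.append(math.tanh(total))
    return h_new


def run_rnn(sequence, w_xh, w_hh, b):
    """Feed a sequence through the cell, starting from a zero hidden state."""
    h = [0.0] * len(b)
    for x in sequence:
        h = rnn_step(x, h, w_xh, w_hh, b)
    return h
```

Because each step mixes the new input with the previous hidden state, feeding the same items in a different order produces a different final state. This sensitivity to order is what makes the architecture suitable for text, music, and time series.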

Diffusion models, on the other hand, operate by progressively adding noise to data and then learning to reverse that process step by step, so that new samples can be generated starting from pure noise. Their strength lies in producing high-quality results, especially with images. In the medical field, for instance, they’ve shown promise by generating clear images from noisy MRI scans, aiding in more precise diagnoses.
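The noise-adding half of a diffusion model is simple enough to write down directly. The sketch below assumes a DDPM-style setup with a linear noise schedule; the schedule endpoints, the number of steps, and the function names are illustrative choices, not values taken from this article. The learned half, a network trained to predict the noise so that it can be removed, is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise levels, rising linearly from beta_start to beta_end."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alphas(betas):
    """Running product of (1 - beta): the fraction of signal left at each step."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def add_noise(x0, t, alpha_bars, rng):
    """Jump straight to step t: x_t = sqrt(a) * x0 + sqrt(1 - a) * noise."""
    a = alpha_bars[t]
    noise = [rng.gauss(0.0, 1.0) for _ in x0]
    x_t = [math.sqrt(a) * x + math.sqrt(1.0 - a) * n for x, n in zip(x0, noise)]
    return x_t, noise


alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
```

Early steps leave the data almost untouched, while by the last step almost none of the original signal remains. During training, a model would be shown `x_t` and asked to predict `noise`; at generation time, that prediction is used to walk back from random noise toward a clean sample.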

Recently, Transformer Networks have emerged as a force to be reckoned with, especially in tasks requiring a deep understanding of context. Their self-attention mechanism allows them to weigh the importance of different data points in a sequence, making them exceptionally good at tasks like language processing. OpenAI’s GPT, a language model capable of answering questions and crafting poetry, stands as a testament to the power of transformer architecture. The self-attention mechanism, combined with positional encodings, lets the model take both the order and the context of the data into account when producing its outputs.
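Self-attention and positional encodings can both be written in a few lines. The sketch below is a plain-Python illustration of scaled dot-product attention and the sinusoidal position encoding. It assumes the query, key, and value vectors are supplied directly, whereas a real transformer derives them from the input through learned projections and uses several attention heads. The function names are illustrative.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    highest = max(scores)
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention over a sequence of vectors."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How relevant is every position to the current one?
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys
        ]
        weights = softmax(scores)
        # Each output is a weighted average of the value vectors.
        output = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


def positional_encoding(position, d_model):
    """Sinusoidal encoding: sine on even dimensions, cosine on odd ones."""
    encoding = []
    for i in range(d_model):
        angle = position / (10000 ** ((2 * (i // 2)) / d_model))
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding
```

The attention weights are the "importance" scores described above: positions whose keys match the query closely receive most of the weight. Attention alone ignores order, so the positional encoding is added to each input vector to give every position a distinct signature.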

As we delve deeper into the world of generative AI, it becomes evident that these techniques, with their myriad applications, are not just shaping our technological landscape but also influencing the very fabric of our daily lives.

Applications of Generative AI:


Art and Creativity:

Models like DALL·E by OpenAI have showcased the ability to generate novel art pieces by interpreting textual prompts. Similarly, Jukebox, another model by OpenAI, can compose music in various styles, emulating famous artists or even creating entirely new compositions.

Scientific and Industrial Applications:

In drug discovery, models like AtomNet have been used to predict bioactive molecules, speeding up the drug development process. For material design, generative models help predict the properties of new materials, aiding in the discovery of innovative compounds.

Entertainment and Media:

Face replacement technology, powered by GANs, has been used in movies and media to create realistic video content. Game developers are employing models like Promethean AI to automate environment creation, enhancing the realism and detail of virtual worlds.

Audio:

WaveGAN is an example of a model that can generate audio clips. It’s been used to produce sound effects and even short music clips. Descript’s “Overdub” feature uses generative models to create realistic voice recordings from text inputs.

Visual:

NVIDIA’s StyleGAN has produced high-resolution images, from portraits to landscapes. Its ability to modify and generate images has applications in design, advertising, and film production.


Synthetic Data:

GANs are often used to produce synthetic datasets, especially in domains where data collection is challenging. For instance, in medical imaging, synthetic data can augment real datasets, aiding in training more robust diagnostic models.

Information Extraction:

BERT, a transformer-based model, has been employed for information retrieval from complex documents. Its ability to understand context makes it invaluable for extracting relevant details from vast datasets.

Developer Assistance:

GitHub’s Copilot, powered by OpenAI’s Codex, assists developers by suggesting code snippets, automating repetitive tasks, and even offering solutions to complex coding challenges.

Challenges and Criticisms of Generative AI


Generative AI, while revolutionary, is not without its challenges and criticisms. As we delve deeper into the world of generative models and their applications, it’s essential to address the hurdles they face and the concerns they raise.

- Technical Challenges:

Generative models, especially GANs, often grapple with issues like mode collapse, where the model generates a limited variety of outputs. Training instability is another challenge, where slight changes in the model or data can lead to vastly different results. Overfitting, where the model becomes too attuned to the training data and fails to generalize, is also a common concern.

- Ethical Considerations:

The rise of deepfakes powered by GANs has raised significant ethical concerns. These hyper-realistic fake videos can be misused for misinformation, leading to personal and societal harm. Additionally, as generative models produce content, copyright issues arise. Who owns the rights to AI-generated music or art? Is it the developer, the user, or the AI itself?

- Originality and Authenticity:

With AI models like DALL·E creating art or Jukebox producing music, a debate rages about originality. Can a machine be truly original, or is it merely remixing existing ideas? And as AI-generated content becomes more prevalent, distinguishing between human-made and AI-generated work becomes crucial for authenticity.

- Scale of Compute Infrastructure:

Training state-of-the-art generative models requires vast computational resources. Models like GPT-3.5 or StyleGAN demand significant capital investment in GPUs and other infrastructure. This often limits cutting-edge AI research to well-funded organizations, potentially stifling innovation from individual researchers or smaller institutions.

- Sampling Speed:

While generative models can produce high-quality outputs, the time they take, especially in real-time or interactive scenarios, can be a bottleneck. Achieving low-latency results without compromising on quality remains a challenge.

- Data Quality and Licensing:

Generative models are only as good as the data they’re trained on. Ensuring high-quality, unbiased data is paramount. However, sourcing such data, especially in niche domains, is challenging. Furthermore, licensing issues arise when using third-party datasets, complicating the training process.

The Future of Generative AI: A Confluence of Trends, Impacts, and Integration


Gartner, a global research and advisory firm, offers some compelling insights into the trajectory of generative AI. Their predictions suggest a rapid adoption and integration of generative AI into enterprise applications:

- By 2024, the landscape of enterprise applications will undergo a seismic shift, with 40% embedding conversational AI, a significant leap from the modest 5% in 2020.

- The year 2025 will witness a six-fold increase in enterprises implementing AI-augmented development and testing strategies, rising from 5% in 2021 to 30%.

- Fast forward to 2026, and the design landscape for new websites and mobile apps will be revolutionized. Generative design AI will automate a staggering 60% of the design effort, streamlining the creation process.

- Soon, the lines between human and machine collaboration will blur further, with over 100 million humans working alongside robocolleagues, enhancing productivity and fostering innovation.

- By 2027, the automation capabilities of generative AI will reach unprecedented levels. Nearly 15% of new applications will be autonomously generated by AI, eliminating the need for human intervention—a phenomenon that’s virtually non-existent today.


The generative AI marketplace is burgeoning, with both tech giants and niche players vying for dominance. Beyond the major platform players like Google, Microsoft, Amazon, and IBM, a plethora of specialty providers, backed by significant venture capital, are making waves. These entities are not only advancing the state of generative AI but also integrating it with other AI branches, leading to holistic, integrated AI systems.

For instance, Google’s large language models, PaLM and Bard, are being integrated into its suite of workplace applications, making generative AI accessible to millions. Microsoft, in collaboration with OpenAI, is embedding generative AI into its products, with the added advantage of the buzz surrounding ChatGPT. Amazon’s partnership with Hugging Face and its ventures like Bedrock and Titan exemplify the fusion of generative AI with cloud computing and enhanced search capabilities. IBM, with its array of foundation models, showcases the potential of fine-tuning generative AI models for specific enterprise needs.

Conclusion: The Dawn of a Generative Era

The widespread adoption of generative AI will undoubtedly bring transformative benefits, from enhancing productivity to fostering innovation. However, with great power comes great responsibility. The potential for misuse, especially in areas like AI-generated media or unauthorized content generation, necessitates robust regulatory frameworks. Societies will grapple with questions of authenticity, copyright, and the ethical implications of AI-generated content. Regulatory bodies will play a pivotal role in ensuring that the deployment of generative AI aligns with societal values, ethics, and legal standards.

The future of generative AI is not just about advanced algorithms or sophisticated models – it’s about the confluence of technology, society, and ethics. Generative AI models and systems are not just tools; they are catalysts, reshaping industries, redefining creativity, and reimagining the boundaries of human-machine collaboration.