Prompt engineering is a fascinating new frontier in AI, and it is rapidly gaining momentum as more people discover what LLMs can do. Research in the field has grown sharply in the last couple of years, since consumer applications such as ChatGPT took the Internet by storm.

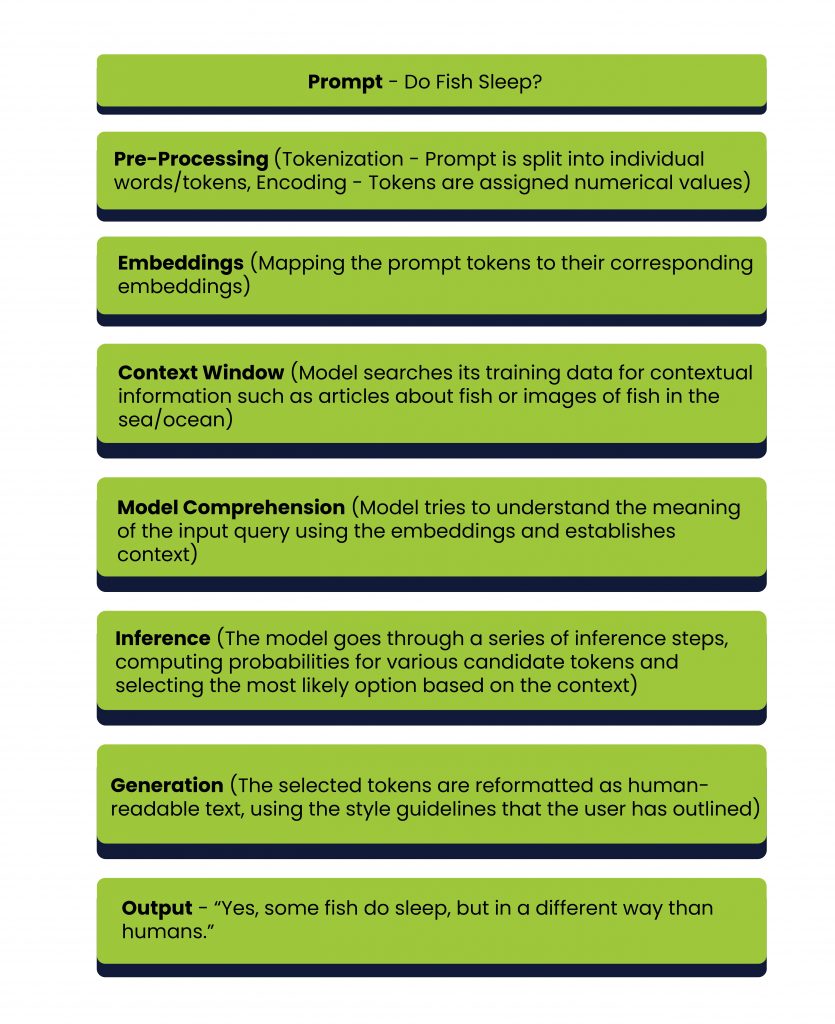

Prompt engineering refers to the art and science of querying foundation models, such as large language models (LLMs), with the right inputs to achieve a desired output.

Whether you want to understand a research paper, translate text from a foreign language, or write code for your own version of Angry Birds, prompt engineering is key to getting useful, accurate answers out of these models.

Since LLMs are somewhat like a “black box” from the outside, prompting them in the right way is essential for success.

In this article, we will explore the principles, techniques, and best practices for prompt engineering to help you get the best out of modern-day AI tools and prepare yourself for this brave new world. Let us begin.

What is Prompt Engineering?

Imagine you have Tony Stark’s J.A.R.V.I.S. as your personal assistant. It is super smart and knows a lot about every conceivable topic under the sun. But sometimes, when you ask it a question, it doesn’t give you the exact answer you’re looking for, or it gives you the wrong answer. This is where prompt engineering comes in. Prompt engineering involves giving your omniscient robot assistant a special set of instructions or guidelines to help it better understand the task at hand.

Prompt engineering plays a crucial role in enhancing the capabilities of LLMs across a wide range of tasks, from question answering to arithmetic reasoning. It involves carefully designing prompts and using various techniques to interact effectively with LLMs and other tools.

However, prompt engineering goes beyond simply crafting prompts. It encompasses a diverse set of skills and methodologies that enable researchers and developers to interface with LLMs more effectively and understand their capabilities. It is a valuable skill set for improving the safety and reliability of LLMs, as well as for expanding their functionalities by incorporating domain knowledge and integrating external tools.

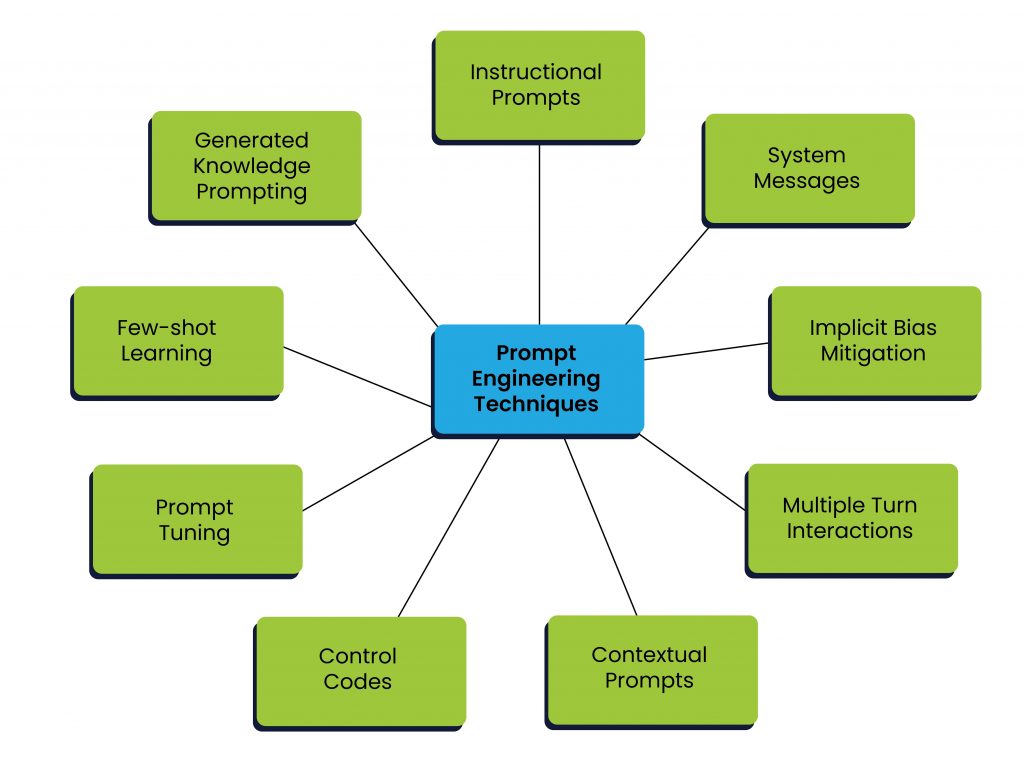

Prompt Engineering Techniques

Prompt engineering is a rapidly evolving field of research. New techniques and best practices keep emerging as foundation models and LLMs are adopted across a broad range of industries and disciplines. Let’s look at some of them.

- Instructional Prompts: These prompts provide explicit instructions to guide the behavior of the language model. When you specify the desired format or task, the model can generate responses that align with those instructions. Instructional prompts help focus the model’s output and encourage it to produce relevant, accurate responses.

| Example: “Write a persuasive essay arguing for or against the use of renewable energy sources.” In this prompt, the model is directed to generate an essay that takes a position on renewable energy and presents arguments to support that position. |

- System Messages: System messages set the role or context of the language model. A clear persona or context lets you tailor the model’s responses accordingly. This technique creates a conversational framework that guides the model’s behavior and helps keep its replies coherent and relevant.

| Example: “You are an AI language model with expertise in psychology. Offer advice to a person struggling with anxiety.” |

Here, the system message casts the model in the role of a knowledgeable psychologist. This prompts it to offer appropriate advice to a user seeking help with anxiety.
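When you call a chat-style model, the system message is usually sent separately from the user’s request. The minimal Python sketch below follows the list-of-`{"role", "content"}` message format used by OpenAI’s Chat Completions API and many similar chat APIs. Splitting the example above into a system turn and a user turn is an illustrative choice. `build_messages` is a hypothetical helper, and the sketch makes no API call.

```python
from typing import Dict, List

Message = Dict[str, str]


def build_messages(system_prompt: str, user_prompt: str) -> List[Message]:
    """Return a chat transcript: the system message first, then the user's turn."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(
    "You are an AI language model with expertise in psychology.",
    "Offer advice to a person struggling with anxiety.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```

Keeping the persona in its own system turn means you can reuse it unchanged across many different user requests.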

- Contextual Prompts: Contextual prompts include relevant information or preceding dialogue, giving the model the context it needs to generate responses. With that context, the model can better understand the user’s intent and produce more appropriate and coherent replies.

| Example: “User: Can you recommend a good restaurant in Paris?” |

The user’s query itself supplies the necessary context (the city), so the model can offer a relevant and helpful restaurant recommendation in Paris.

- Implicit Bias Mitigation: Prompt engineering can be used to reduce potential biases in the language model’s responses. Carefully crafting prompts and avoiding leading or biased language encourages fairer, less biased outputs. This technique aims to promote inclusive and objective responses from the model.

| Example: Biased prompt: “Why are all teenagers so lazy?” Unbiased prompt: “Discuss the factors that contribute to teenage behavior and motivation.” |

By reframing the prompt to avoid generalizations and stereotypes, the model is guided to provide a more balanced and nuanced response.

- Multiple-turn Interactions: This technique involves engaging in a conversation with the model through multiple turns or exchanges. By building a back-and-forth dialogue, each turn can provide additional context, allowing the model to generate more coherent and contextually aware responses.

| Example: Turn 1: “User: What are the top tourist attractions in New York City?” <model output> Turn 2: “User: Thanks! Can you also suggest some popular Broadway shows?” |

This multi-turn interaction allows the model to understand the user’s follow-up question and respond with relevant suggestions for Broadway shows in New York City.
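With stateless chat APIs, the model typically has no memory between calls, so the application resends the earlier turns with every new request. The Python sketch below assumes that setup. `Conversation` and `echo_model` are hypothetical names. `echo_model` stands in for a real LLM call and only reports how much context it received.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]


class Conversation:
    """Keeps the running message history so each request carries the earlier turns."""

    def __init__(self, model: Callable[[List[Message]], str]) -> None:
        self.model = model  # any function that maps a message history to a reply
        self.history: List[Message] = []

    def ask(self, user_text: str) -> str:
        self.history.append({"role": "user", "content": user_text})
        reply = self.model(self.history)  # the model sees the whole history
        self.history.append({"role": "assistant", "content": reply})
        return reply


def echo_model(history: List[Message]) -> str:
    """Hypothetical stand-in for an LLM: reports how many messages it was given."""
    return f"(reply based on {len(history)} message(s) of context)"


chat = Conversation(echo_model)
chat.ask("What are the top tourist attractions in New York City?")
print(chat.ask("Thanks! Can you also suggest some popular Broadway shows?"))
```

On the second turn, the model receives three messages: the first question, its own earlier answer, and the follow-up. That is why “New York City” does not need to be repeated.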

- Control Codes: Control codes are used to modify the behavior of the language model by specifying attributes such as sentiment, style, or topic. By incorporating control codes, prompt engineering can achieve more fine-grained control over the model’s responses, enabling customization for specific requirements.

| Example: “[Sentiment: Negative] Express your disappointment regarding the recent product quality issues.” |

By including the sentiment control code, the model is directed to generate a response that expresses negative sentiment about the mentioned product quality issues.

- Prompt Tuning: Prompt tuning is an efficient technique for adapting large pre-trained language models (PLMs) to downstream tasks. It freezes the PLM’s weights and instead trains “soft prompts”: continuous embedding vectors prepended to the input and learned through backpropagation from labeled examples. Unlike a hand-written prompt, a soft prompt is a set of learned numbers rather than human-readable text.

Because only the soft-prompt parameters are updated, prompt tuning adapts a pre-trained language model to a specific task while saving time and computational resources compared to fine-tuning the entire model.
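A deliberately tiny, pure-Python toy can illustrate the mechanics. Everything here is an assumption made for illustration. The “model” just averages its input vectors and applies a fixed linear readout, and `frozen_model` and `tune_soft_prompt` are hypothetical names. Real prompt tuning prepends learned vectors to a transformer’s token embeddings and is implemented with a deep learning framework. The key idea carries over, though: gradients update only the soft prompt, never the model’s weights.

```python
from typing import List, Tuple

Vector = List[float]


def frozen_model(embeddings: List[Vector], readout: Vector) -> float:
    """Toy frozen model: average the input vectors, then apply a fixed linear readout."""
    n = len(embeddings)
    pooled = [sum(e[i] for e in embeddings) / n for i in range(len(readout))]
    return sum(p * w for p, w in zip(pooled, readout))


def tune_soft_prompt(
    examples: List[Tuple[List[Vector], float]],  # (input vectors, target score)
    readout: Vector,
    prompt_len: int = 1,
    lr: float = 0.5,
    steps: int = 200,
) -> List[Vector]:
    """Learn soft-prompt vectors by gradient descent on squared error.

    Only the prompt is updated; `readout` (the model's weights) never changes.
    """
    dim = len(readout)
    prompt = [[0.0] * dim for _ in range(prompt_len)]
    for _ in range(steps):
        grads = [[0.0] * dim for _ in range(prompt_len)]
        for inputs, target in examples:
            seq = prompt + inputs  # prepend the soft prompt to the input
            error = frozen_model(seq, readout) - target
            # d(error**2)/d(prompt[p][i]) = 2 * error * readout[i] / len(seq)
            for p in range(prompt_len):
                for i in range(dim):
                    grads[p][i] += 2 * error * readout[i] / len(seq)
        for p in range(prompt_len):
            for i in range(dim):
                prompt[p][i] -= lr * grads[p][i] / len(examples)
    return prompt


readout = [1.0, 0.0]  # frozen "weights"
examples = [([[1.0, 0.0]], 1.5), ([[3.0, 0.0]], 2.5)]
soft_prompt = tune_soft_prompt(examples, readout)
for inputs, target in examples:
    print(round(frozen_model(soft_prompt + inputs, readout), 3), target)
```

After tuning, the learned prompt vector shifts both outputs onto their targets, and `readout` is exactly as it started.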

- Few-shot Learning: Few-shot learning (often called few-shot prompting) means including a small number of worked examples in the prompt to adapt the language model to a specific task or domain. The model infers the pattern from these examples and applies it to new, similar inputs, without any change to its weights. This technique is useful when there is little data for a particular task or when full fine-tuning is impractical.

| Example: Including a few dialogue samples of a movie character in the prompt allows the model to pick up that character’s style, speech patterns, and personality. The model can then generate responses that are consistent with the character’s traits and behavior. |
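Here is a minimal Python sketch of how a few-shot prompt is assembled. The `Input:`/`Output:` layout, the sentiment task, and the `build_few_shot_prompt` helper are illustrative assumptions, not a required format. The worked examples come before the new input, and the final output is left blank for the model to complete.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str, examples: List[Tuple[str, str]], query: str
) -> str:
    """Place worked input/output pairs before the new input, leaving its output blank."""
    parts = [instruction, ""]
    for example_input, example_output in examples:
        parts.append(f"Input: {example_input}")
        parts.append(f"Output: {example_output}")
        parts.append("")
    parts.append(f"Input: {query}")
    parts.append("Output:")
    return "\n".join(parts)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as Positive or Negative.",
    [
        ("The battery lasts all day.", "Positive"),
        ("It broke after one week.", "Negative"),
    ],
    "Setup was quick and painless.",
)
print(prompt)
```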

- Generated Knowledge Prompting: Generated knowledge prompting harnesses the AI model’s ability to generate knowledge that helps solve a specific task. First, the model is prompted, often with a few demonstrations, to produce facts relevant to the question. That generated knowledge is then included in a second prompt alongside the question, so the model can draw on it when answering.
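The two stages can be sketched in Python as follows. The prompt wording and the `generated_knowledge_answer` helper are illustrative assumptions. `fake_model` is a hypothetical stand-in that records each prompt and returns canned text instead of calling an LLM.

```python
from typing import Callable, List


def generated_knowledge_answer(question: str, model: Callable[[str], str]) -> str:
    # Stage 1: ask the model to write down facts relevant to the question.
    knowledge = model(
        f"Generate some facts that are relevant to answering this question:\n{question}"
    )
    # Stage 2: feed those facts back in alongside the original question.
    answer_prompt = (
        f"Knowledge:\n{knowledge}\n\n"
        f"Using the knowledge above, answer the question:\n{question}"
    )
    return model(answer_prompt)


prompts_seen: List[str] = []


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for an LLM call that records each prompt it receives."""
    prompts_seen.append(prompt)
    if len(prompts_seen) == 1:
        return "Golf is won by the player with the fewest total strokes."
    return "No. In golf, the lowest total score wins."


answer = generated_knowledge_answer(
    "Part of golf is trying to get a higher point total than others. Yes or no?",
    fake_model,
)
print(answer)
```

The second prompt contains the fact produced in the first call. That is the whole point of the technique: the model answers with its own generated knowledge in view.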

Working Within the Token Limits of LLMs

LLMs operate within a “budget” of tokens. Tokens are the units of text, often whole words or word fragments, that the model reads and writes. This budget, usually called the context window, covers both the tokens in the prompt and the tokens in the response. Prompt engineers must keep these limits in mind to make good use of the model’s capacity without exceeding them.

Different LLMs have different token limits, so it is important to know the limit of the specific model you are working with. For example, the original GPT-3 models accept up to 2,048 tokens, and later GPT-3 series models such as text-davinci-003 accept about 4,096. The older GPT-2 is limited to 1,024 tokens. These limits cap the combined length of the prompt and the response. Exceeding them can cause the request to be rejected or the input or output to be truncated, which compromises the coherence and quality of the generated response.
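The prompt and the response share one budget, so it helps to check the prompt length before sending a request. The Python sketch below assumes the common rule of thumb of roughly four characters per token for English text. This is only an estimate; an exact count requires the model’s own tokenizer (for OpenAI models, the `tiktoken` library). The helper names are hypothetical.

```python
import math

# Assumption: ~4 characters per token, a rough average for English text.
# Use the model's real tokenizer when you need an exact count.
CHARS_PER_TOKEN = 4


def estimate_tokens(text: str) -> int:
    """Roughly estimate how many tokens `text` will use."""
    return math.ceil(len(text) / CHARS_PER_TOKEN)


def fits_in_context(prompt: str, max_response_tokens: int, context_limit: int) -> bool:
    """Check that the prompt plus the tokens reserved for the reply fit the limit."""
    return estimate_tokens(prompt) + max_response_tokens <= context_limit


def truncate_to_budget(text: str, token_budget: int) -> str:
    """Trim `text` so its estimated size stays within `token_budget` tokens."""
    return text[: token_budget * CHARS_PER_TOKEN]


prompt = "Summarize the following report: " + "lorem ipsum " * 500
print(estimate_tokens(prompt))  # 1508 (6,032 characters / 4)
# Reserving 500 tokens for the reply overflows a GPT-2-sized 1,024-token window.
print(fits_in_context(prompt, max_response_tokens=500, context_limit=1024))  # False
```

Cutting text from the end, as `truncate_to_budget` does, keeps the instructions only because they come first in this prompt. In practice you would usually trim the least important material, such as older conversation turns, rather than cut blindly.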

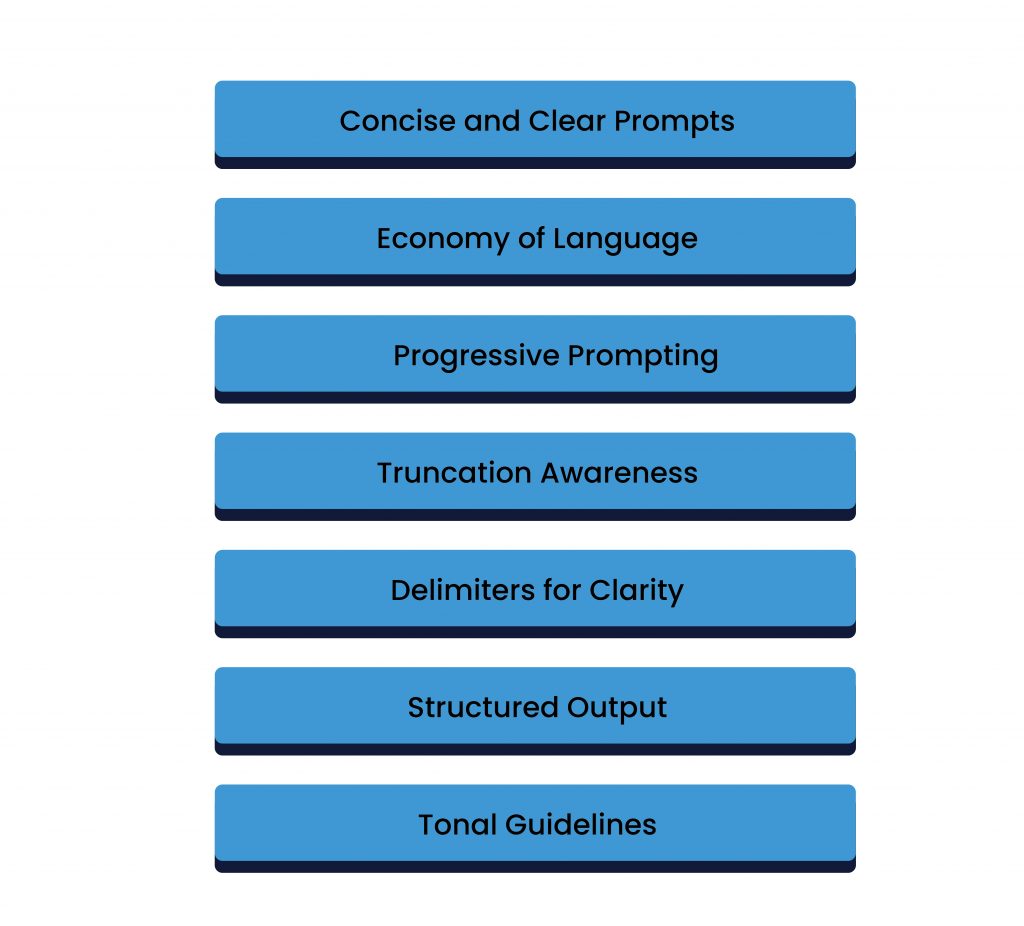

Best Practices for Building Effective Prompts

Prompt engineering is both an art and a science. It requires a delicate balance of brevity, clarity, and adaptability to ensure a seamless interaction that makes the most of the available token budget. As with all scientific pursuits, you will have to constantly refine your approach, objectively evaluate feedback, and adapt to the nuances of the specific AI model you are working with to deliver the best results.

That being said, there is a general set of best practices that you can employ to ensure your prompt engineering process is primed for success.

- Concise and Clear Prompts: Craft prompts that are succinct and clear, conveying the necessary information while minimizing unnecessary verbiage.

- Economy of Language: Keep both your prompts and the responses you ask for as short as the task allows, since every token counts against the model’s budget.

- Progressive Prompting: For lengthy or multi-step tasks, prompt engineers can break down the instructions or requests into smaller, sequential prompts.

- Truncation Awareness: Prompt engineers should be mindful of the potential truncation of input and output when approaching the token limits of the model they are working with.

- Delimiters for Clarity: Use angle brackets (<>), opening and closing tags (<code></code>), and other delimiters to clearly demarcate different portions and subsections of your input.

- Structured Output: Asking the model for output in a structured format such as bulleted lists, JSON, or HTML makes it easier to integrate the LLM output with other applications and processes.

- Tonal Guidelines: You can instruct the model to have a specific tone when generating its output, such as ‘creative’ or ‘conversational’ depending upon your use case.
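Two of these practices, delimiters and structured output, combine naturally in code. The Python sketch below wraps the input in `<doc></doc>` tags, asks for JSON, and validates the reply before passing it on. The tag name, the JSON keys, the helper names, and the sample reply are all hypothetical. Real model replies can still be malformed, which is why the parser checks them.

```python
import json


def build_extraction_prompt(document: str) -> str:
    """Delimit the input with tags and spell out the exact JSON shape expected."""
    return (
        "Extract the product name and price from the text inside the <doc></doc> tags.\n"
        'Respond with JSON only, in the form {"product": string, "price": number}.\n'
        f"<doc>{document}</doc>"
    )


def parse_response(raw: str) -> dict:
    """Parse the model's reply and confirm it has the fields we asked for."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if not JSON
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    missing = {"product", "price"} - set(data)
    if missing:
        raise ValueError(f"response is missing keys: {sorted(missing)}")
    return data


prompt = build_extraction_prompt("The new Acme kettle retails for $39.99.")
reply = '{"product": "Acme kettle", "price": 39.99}'  # hypothetical model reply
print(parse_response(reply)["price"])  # 39.99
```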

What is the future of Prompt Engineering?

With the rapid evolution of AI technology, coupled with the growing demand for sophisticated language models, the field of prompt engineering is poised for an exciting journey ahead. Here are a few scenarios we are likely to see soon.

- Prompt Marketplaces will Emerge: Just as app stores revolutionized the way we access and distribute software, the future of prompt engineering may see the rise of prompt marketplaces. These marketplaces could serve as platforms where prompt engineers showcase their expertise by offering a diverse range of pre-designed prompts tailored to specific tasks and industries. Such marketplaces would not only simplify the prompt engineering process but also foster collaboration and knowledge sharing within the community.

- Prompt Engineers will be in demand: As reliance on AI models grows and prompts play a pivotal role in shaping their behavior, prompt engineering is likely to emerge as a sought-after profession. Organizations that recognize the value of tailoring language models to specific applications will need prompt engineers with the expertise to craft effective prompts, optimize model outputs, and integrate AI systems smoothly into various domains. The prompt engineer will be seen as a critical bridge between AI models and real-world applications.

- Enhanced Prompt Design Tools: The future of prompt engineering will likely bring sophisticated tools and platforms that let prompt engineers create, refine, and experiment with prompts more easily. These interfaces could offer features like real-time model feedback, prompt optimization suggestions, and visualizations of how changes to a prompt affect the model’s output. With such tools at their disposal, prompt engineers can work more productively and creatively, accelerating the pace of innovation.

Prompt engineering is poised to become an indispensable component of AI development, where industry experts craft nuanced prompts to unlock the potential of language models. By embracing the intricacies of prompt engineering, we can usher in a new era of intelligent interactions and transformative problem-solving.