There are only three AIs everyone talks about: HAL 9000, Samantha from Her, and Terminator’s Skynet. They all come from movies, and they shape how people think about AI. They limit our imagination rather than expand it. But GPT-4o is different. It’s not science fiction. It’s real, and it’s here.

GPT-4o talks, listens, and understands. You can have a conversation with it, and it will respond in real time. It’s not perfect, but it’s the best we have, and it’s only going to get better.

This feels like the future of AI, and it is only the beginning: a future where AI and humans work together, communicate seamlessly, and push the boundaries of what is possible.

It’s time to let go of the movie tropes and embrace reality.

GPT-4o’s Unprecedented Capabilities

GPT-4o, short for “GPT-4 omni,” is not just another incremental improvement in AI technology. It represents a significant step towards more natural and seamless human-computer interaction. What sets GPT-4o apart is that it works natively across modalities, including text, audio, images, and video.

Imagine having a conversation with an AI that feels as natural as talking to another person. With GPT-4o, this is closer to reality than ever before. The model can respond to audio inputs in as little as 232 milliseconds, with an average response time of 320 milliseconds. This is comparable to the response time of a human in a conversation. Its ability to process and generate audio in real time enables more fluid and natural interactions between humans and computers.

But GPT-4o’s capabilities go beyond just audio processing. It is a true multimodal AI that can handle any combination of text, audio, image, and video inputs and generate appropriate outputs in text, audio, and image formats. This versatility opens up many possibilities for interacting with and using AI technology.

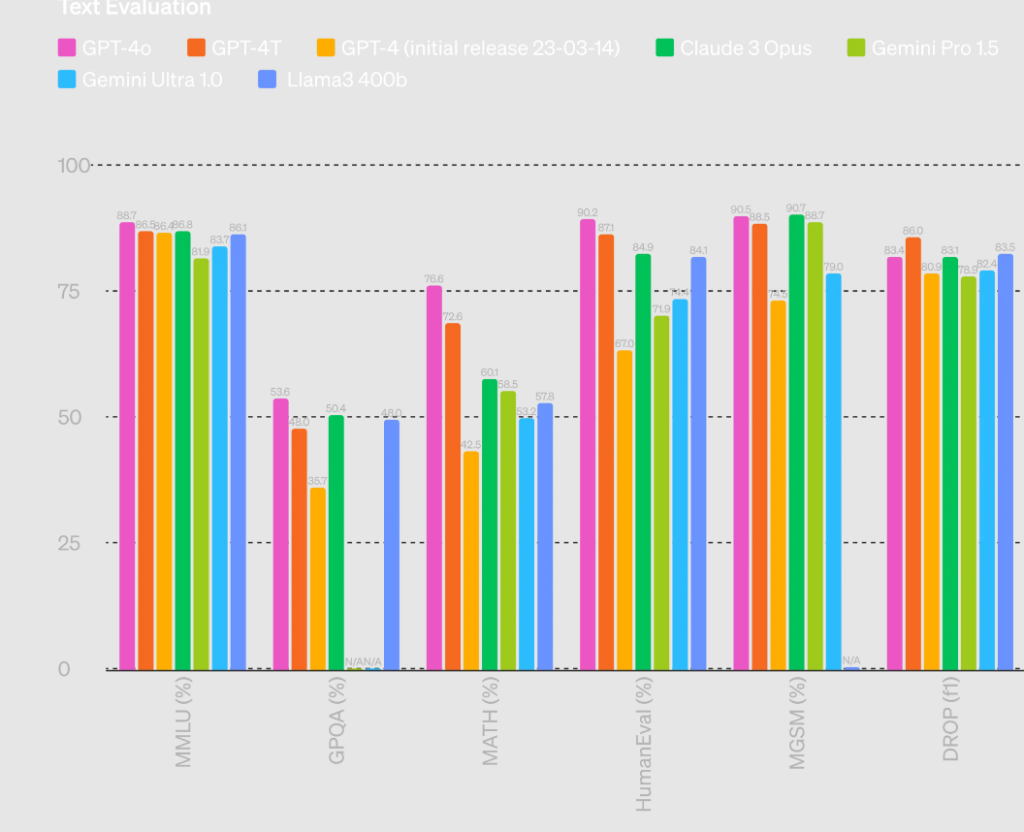

In terms of performance, GPT-4o is a powerhouse. It matches GPT-4 Turbo, one of the most advanced language models to date, on English text and code. Where GPT-4o really shines is in non-English languages: it significantly outperforms previous models at understanding and generating text across a wide range of languages.

Moreover, GPT-4o achieves this performance while being much faster and more cost-effective than its predecessors. Through the API, it costs 50% less than GPT-4 Turbo, making it more accessible and practical for a wide range of applications.

Key Capabilities:

- Improved Reasoning: GPT-4o sets new high scores on challenging reasoning benchmarks. It achieves 88.7% on 0-shot chain-of-thought (CoT) MMLU, a benchmark of general-knowledge questions, and 87.2% on the traditional 5-shot no-CoT MMLU. These evaluations were run with OpenAI’s new simple-evals library.

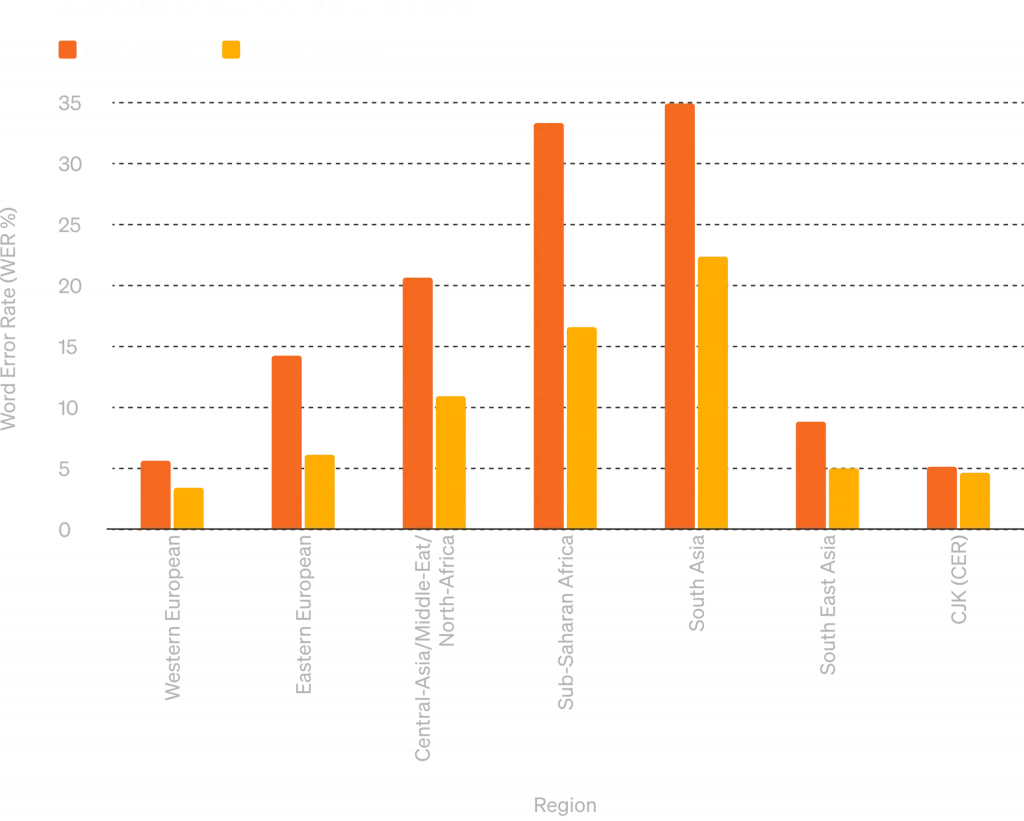

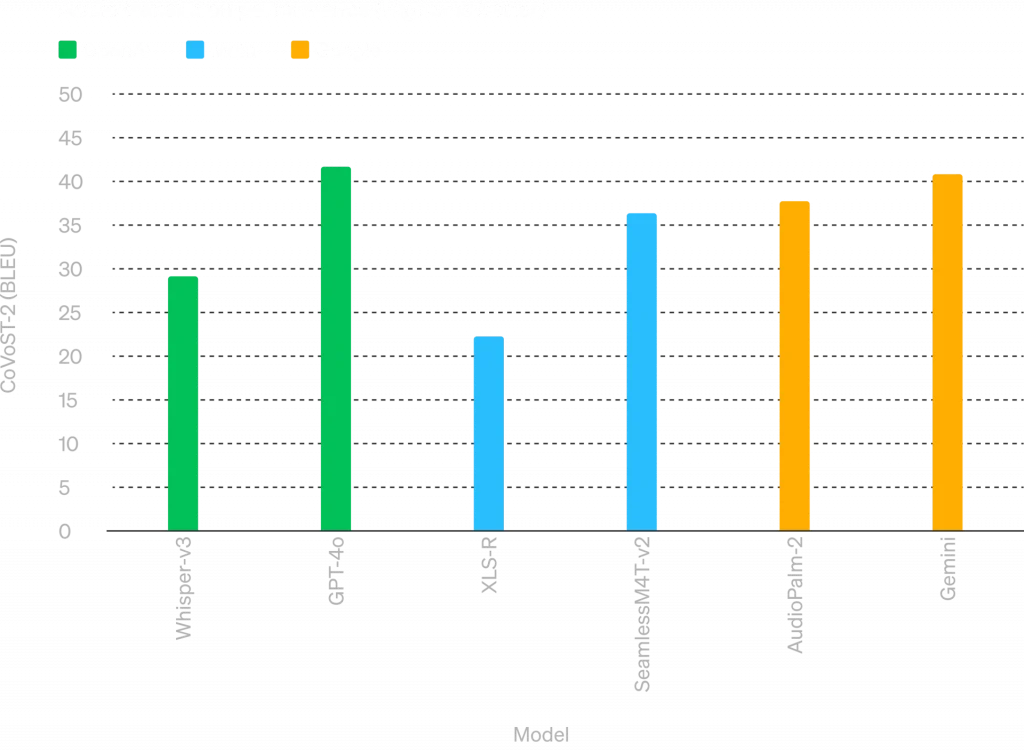

- Enhanced Audio Speech Recognition: GPT-4o significantly improves speech recognition performance over Whisper-v3, OpenAI’s previous speech recognition model, across all languages and particularly in lower-resourced languages. Accurately transcribing and understanding spoken language makes it versatile for real-world applications.

- State-of-the-Art Audio Translation: Beyond speech recognition, GPT-4o sets a new state of the art in speech translation. It outperforms Whisper-v3 on the MLS benchmark, translating spoken language effectively across many languages. This capability could help break down language barriers and facilitate global communication.

- Multilingual and Visual Understanding: GPT-4o demonstrates its prowess in both multilingual and visual understanding through the M3Exam benchmark. This evaluation consists of multiple-choice questions from standardized tests in different countries, often including figures and diagrams. GPT-4o outperforms GPT-4 across all languages on this benchmark.

GPT-4o’s new tokenizer significantly reduces the number of tokens required to represent text across a wide range of languages, from Gujarati and Telugu to Spanish and French. For example, Gujarati text now requires 4.4x fewer tokens, while Arabic and Persian see 2x and 1.9x reductions, respectively. Even English, where tokenization was already relatively efficient, needs 1.1x fewer tokens. Fewer tokens for the same text lets GPT-4o handle many languages more effectively and with less computational overhead than its predecessors.

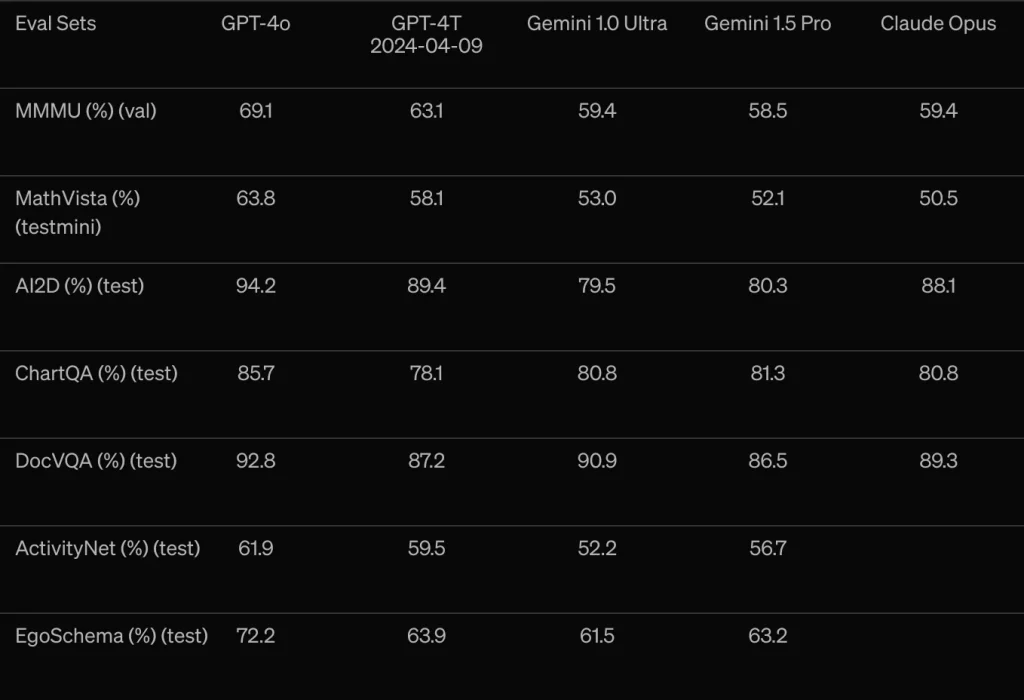
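Because API usage is billed per token, the tokenizer gains above compound with the lower per-token price. The short sketch below works through that arithmetic using the token-reduction factors quoted above. It assumes that "50% cheaper" means GPT-4o's per-token price is half of GPT-4 Turbo's for both input and output tokens, and that both comparisons are against the same predecessor; real savings depend on the actual text and the mix of input and output tokens.

```python
# Token-reduction factors quoted above for GPT-4o's new tokenizer:
# e.g. Gujarati text needs 4.4x fewer tokens than with the previous tokenizer.
TOKEN_REDUCTION = {
    "Gujarati": 4.4,
    "Arabic": 2.0,
    "Persian": 1.9,
    "English": 1.1,
}

# Assumption: "50% cheaper" means GPT-4o's per-token API price is half of
# GPT-4 Turbo's, applied uniformly to input and output tokens.
PRICE_RATIO = 0.5


def relative_cost(language: str, price_ratio: float = PRICE_RATIO) -> float:
    """Cost of processing the same text with GPT-4o, as a fraction of the old cost.

    old_cost = old_tokens * old_price
    new_cost = (old_tokens / reduction) * (old_price * price_ratio)
    so new_cost / old_cost = price_ratio / reduction.
    """
    return price_ratio / TOKEN_REDUCTION[language]


if __name__ == "__main__":
    for lang in TOKEN_REDUCTION:
        print(f"{lang}: {relative_cost(lang):.1%} of the previous cost")
```

Under these assumptions, the same Gujarati text would cost about 11.4% of what it did before (0.5 / 4.4), while English text would cost about 45.5% (0.5 / 1.1). The price cut helps every language equally, but the tokenizer gains are largest for languages that were previously tokenized least efficiently.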

- Cutting-Edge Vision Understanding: GPT-4o achieves state-of-the-art performance on visual perception benchmarks, further highlighting its multimodal capabilities. All vision evaluations are conducted in a 0-shot setting, with GPT-4o excelling on benchmarks such as MMMU, MathVista, and ChartQA using 0-shot CoT (Chain-of-Thought) prompting.
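The MMLU and vision results above mix two evaluation setups: 0-shot CoT, where the model sees only the question and is asked to reason before answering, and 5-shot no-CoT, where it sees five solved examples and answers directly. The sketch below builds both prompt styles for a multiple-choice question. The prompt wording, the `Question` type, and the helper functions are hypothetical illustrations, not the templates used in OpenAI's evaluations.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """Hypothetical MMLU-style item with four lettered options."""
    stem: str
    choices: list[str]
    answer: str = ""  # correct letter; only needed for few-shot exemplars


LETTERS = "ABCD"  # assumes exactly four options, as in MMLU


def format_question(q: Question) -> str:
    options = "\n".join(f"{LETTERS[i]}) {c}" for i, c in enumerate(q.choices))
    return f"{q.stem}\n{options}"


def zero_shot_cot_prompt(q: Question) -> str:
    # 0-shot CoT: no solved examples; the model is asked to reason
    # step by step before committing to a letter.
    return (
        format_question(q)
        + "\n\nThink step by step, then finish with 'Answer: <letter>'."
    )


def few_shot_no_cot_prompt(q: Question, exemplars: list[Question], k: int = 5) -> str:
    # k-shot no-CoT: k solved examples with bare answers, then the target
    # question; the model is expected to reply with a letter only.
    if len(exemplars) < k:
        raise ValueError(f"need at least {k} exemplars, got {len(exemplars)}")
    shots = [f"{format_question(e)}\nAnswer: {e.answer}" for e in exemplars[:k]]
    return "\n\n".join(shots + [f"{format_question(q)}\nAnswer:"])
```

The 0-shot CoT prompt spends output tokens on reasoning and no input tokens on examples; the 5-shot prompt does the opposite. That is one reason scores under the two setups, such as the 88.7% and 87.2% quoted above, should not be read as results on the same test.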

Exploring GPT-4o’s Capabilities and Use Cases

The announcement of a model as malleable as GPT-4o brings a range of exciting possibilities and use cases to mind. Let’s discuss a few important ones.

Natural human-computer interaction

- Real-time audio response

GPT-4o’s real-time audio response could transform the global travel industry. The AI can act as a live voice translator, serving as a middleman between people speaking different languages. It supports interruptions and live voice-to-voice interaction, a significant improvement over existing translation tools. With models like this, language barriers may eventually stop hindering international travel and business communication.

- Emotional expression and tone recognition

GPT-4o takes human-computer interaction to a new level with its ability to recognize emotional expressions and tones. The AI can detect emotions from selfies, prompting users to share the reason behind their smiles or frowns. This feature creates a more engaging and personalized user experience. Additionally, GPT-4o can generate dramatic and emotional voice outputs, making the interaction feel more natural and human-like. This capability has the potential to enhance virtual assistants and customer service applications, providing a more empathetic and understanding interface for users.

Specific applications and demonstrations

- Live translation tool

During a demo, OpenAI showcased GPT-4o’s live translation capabilities: the AI translated between Italian and English in real time, enabling smooth conversation between speakers of the two languages. For tourists and business travelers, this could mean navigating foreign countries, talking with locals, and even negotiating deals without a human translator.

- Vision capabilities

The model’s vision capabilities open up a world of possibilities for interactive learning and personal assistance. Through your phone’s camera, the AI can give feedback on graphs and equations and even recognize and comment on your outfit. This can be particularly useful in education, where GPT-4o can help students understand complex concepts and give instant feedback on their work. The same capabilities could offer personalized fashion advice and help users navigate unfamiliar environments.

- Educational use cases

GPT-4o has the potential to transform the way we learn and teach. The AI can analyze code and provide feedback, helping students learn programming languages more effectively. It can also walk through equations step by step, acting as a virtual tutor. This can make learning more accessible and engaging, especially for students who struggle with traditional teaching methods. With such a tutor on hand, students can receive personalized attention and guidance that helps them master the material before moving on to more advanced topics.

- Accessibility features

GPT-4o’s vision capabilities have significant implications for accessibility. The AI can describe surroundings to visually impaired users, giving them a better understanding of their environment. Integrated with smart glasses, it could provide real-time audio descriptions of the world around them. This may help visually impaired people navigate public spaces more independently, engage in social interactions more effectively, and access information that was previously unavailable to them.

Comparison to other voice assistants

GPT-4o stands out from other voice assistants like Siri, Alexa, and Google’s Gemini in several key ways. Its advanced language understanding enables more fluid, context-aware conversations that feel more human. Its real-time translation is a game-changer, letting users communicate across language barriers with little effort. Moreover, its emotion recognition adds a layer of empathy to the user experience, setting it apart from more traditional voice assistants.

The Future with GPT-4o and Beyond

As we stand on the cusp of a new era in artificial intelligence, we must consider the potential impact of models like GPT-4o and their successors on humanity’s future.

Potential Benefits:

- Breaking down language barriers: GPT-4o and its successors could enable seamless, real-time translation across hundreds of languages, fostering greater understanding and collaboration among people from different cultures and backgrounds. This has profound implications for international business, diplomacy, and education, helping to create a more connected and empathetic world.

- Democratizing learning and personalized tutoring: Advanced language models could provide students from all walks of life access to virtual teachers that adapt to their individual learning styles, offer instant feedback, and provide guidance in various subjects. This could help bridge educational inequalities and empower learners around the globe to reach their full potential.

- Enhancing mental health support: The emotional awareness displayed by models like GPT-4o could pave the way for more supportive and understanding mental health resources. Virtual therapists and counselors powered by these systems could offer 24/7 support to people struggling with mental health issues, providing a safe and non-judgmental space to express their thoughts and feelings.

While not replacing human mental health professionals, these AI-powered resources could serve as a valuable complement, helping to destigmatize mental health issues and ensure that everyone has access to the support they need.

Potential Risks and Challenges:

- More convincing misinformation: As language models become more sophisticated, it may become increasingly difficult to distinguish between human-generated and AI-generated content. Malicious actors could exploit this to manipulate public opinion and sow discord, posing a significant societal challenge.

- Impact on the job market: With AI systems becoming more adept at tasks traditionally performed by humans, such as writing, translation, and analysis, there is a risk of job displacement across various industries. While some argue that these technologies will also create new job opportunities, it is crucial to ensure that the benefits of AI are distributed fairly and that workers are supported in acquiring the skills necessary to thrive in a rapidly evolving economy.

- Privacy and data security concerns: As models like GPT-4o grow more capable and are used in more applications, it will be essential to develop robust safeguards and regulations that protect individuals’ personal information and prevent the misuse of these powerful tools.

Shaping the Future:

- Proactively addressing risks: To harness the power of AI for the greater good, it is crucial to proactively address the potential risks and challenges associated with advanced language models. This involves developing and implementing ethical guidelines, fostering interdisciplinary collaboration, and engaging in open dialogue with stakeholders from various sectors of society.

Conclusion

GPT-4o is a tool, a marvel, and a challenge all at once.

It can break barriers or deceive, educate or displace. At this crossroads, we must choose to harness AI for the greater good and shape a future where technology serves humanity. The journey will be challenging, but we must remain committed to ethics, equality, and preserving what makes us human.