In our world, AI has grown out of sci-fi tales into the fabric of daily life.

At Harvard, scientists crafted a self-supervised deep learning algorithm, SISH (Self-Supervised Image search for Histology), a tool sharp as a scalpel in the vast anatomy of data. It finds diseases hidden like buried treasure, promising a new dawn in diagnostics. This self-taught machine searches through the dense forests of pathology images, retrieving similar cases to help reveal rare diseases.

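Yet this same technology, when aimed at simplifying healthcare, can also mirror our oldest biases. One widely used algorithm for flagging patients who need extra care treated past healthcare spending as a proxy for medical need. Because less money has historically been spent on Black patients with the same level of need, it underestimated how sick they were, missing the stark realities of racial inequality. This oversight is not just a failure of technology but a reflection of our societal flaws.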

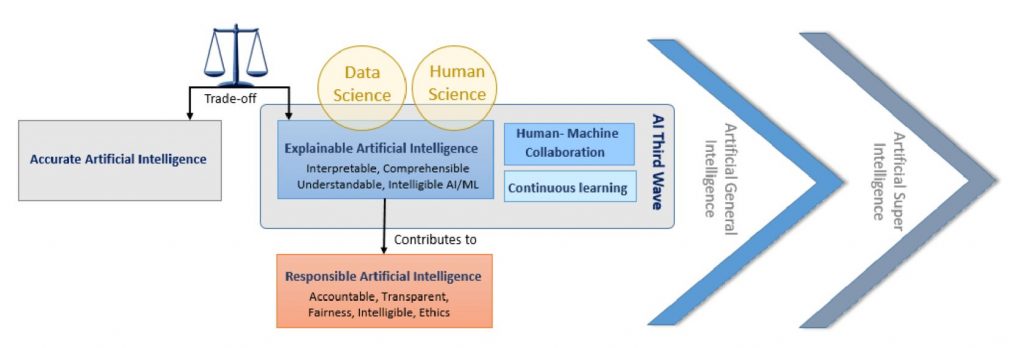

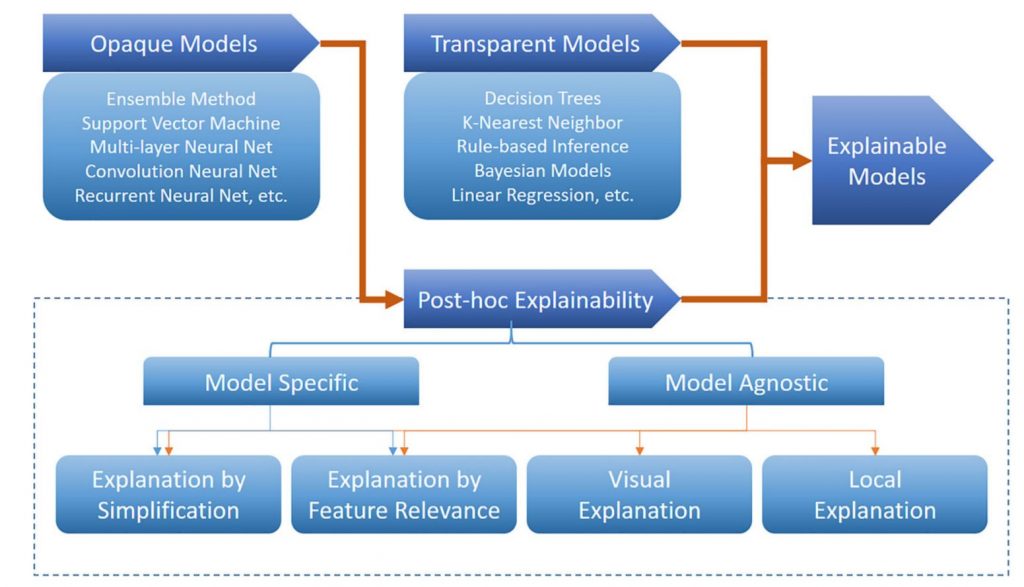

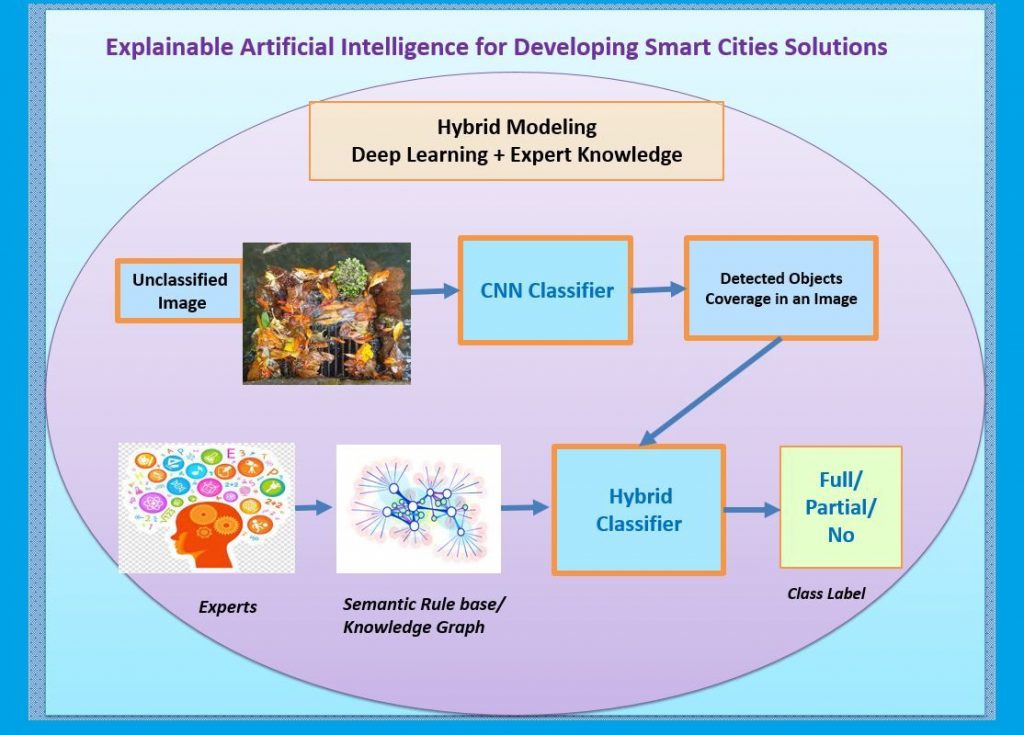

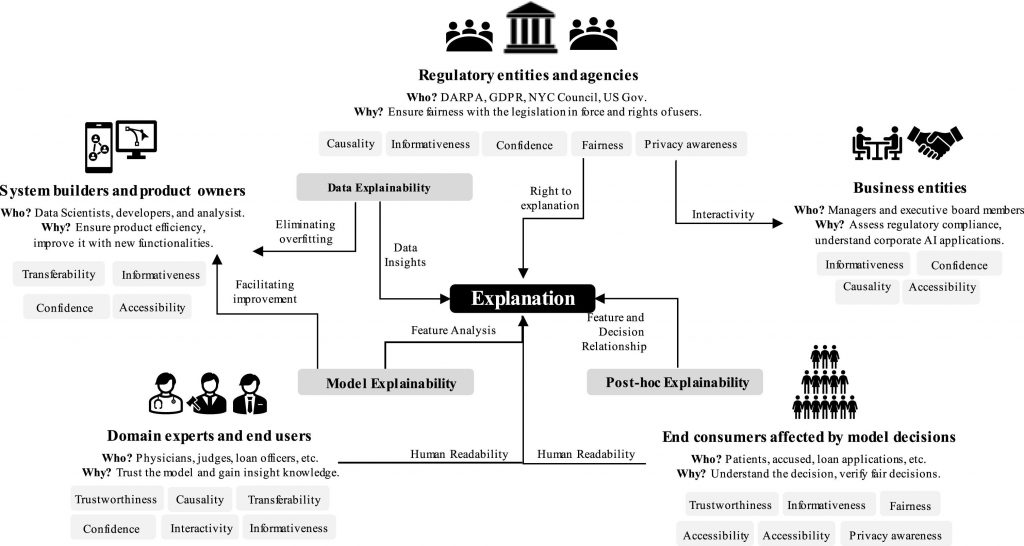

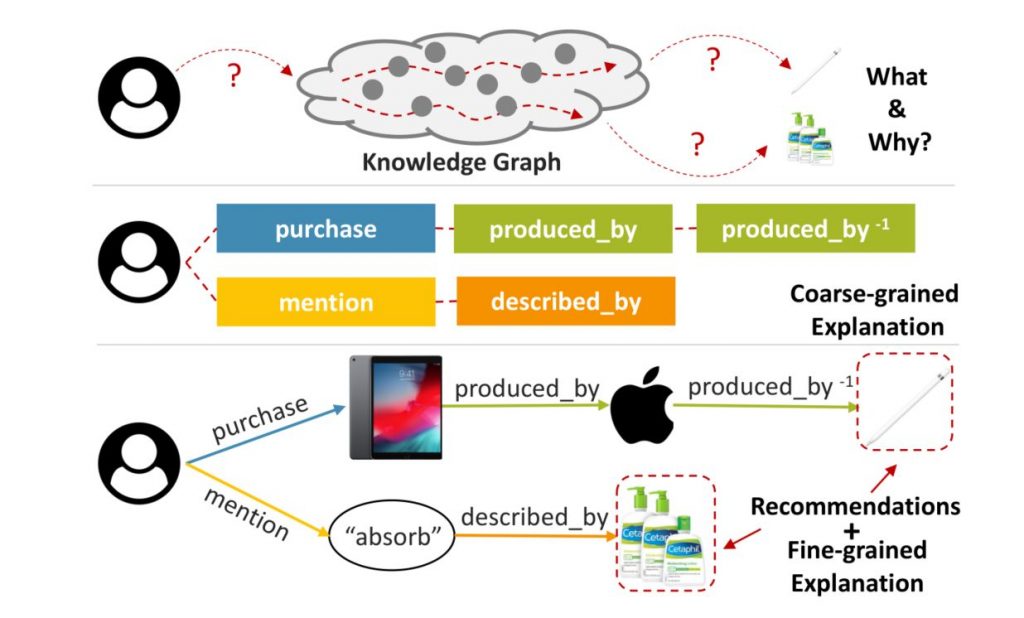

A schematic view of XAI-related concepts

We stand at a crossroads with these two tales of AI. One promises salvation, the other, perpetuation of past injustices.

The question that looms large is one of understanding—can we ever truly know the mind behind the machine?

This is where the story of Explainable AI begins—a quest for clarity, for a future where AI’s workings are laid bare. In this article, we will seek to uncover the workings of these silent giants and bridge the divide between human judgment and algorithmic precision.

The Need for Explainable AI

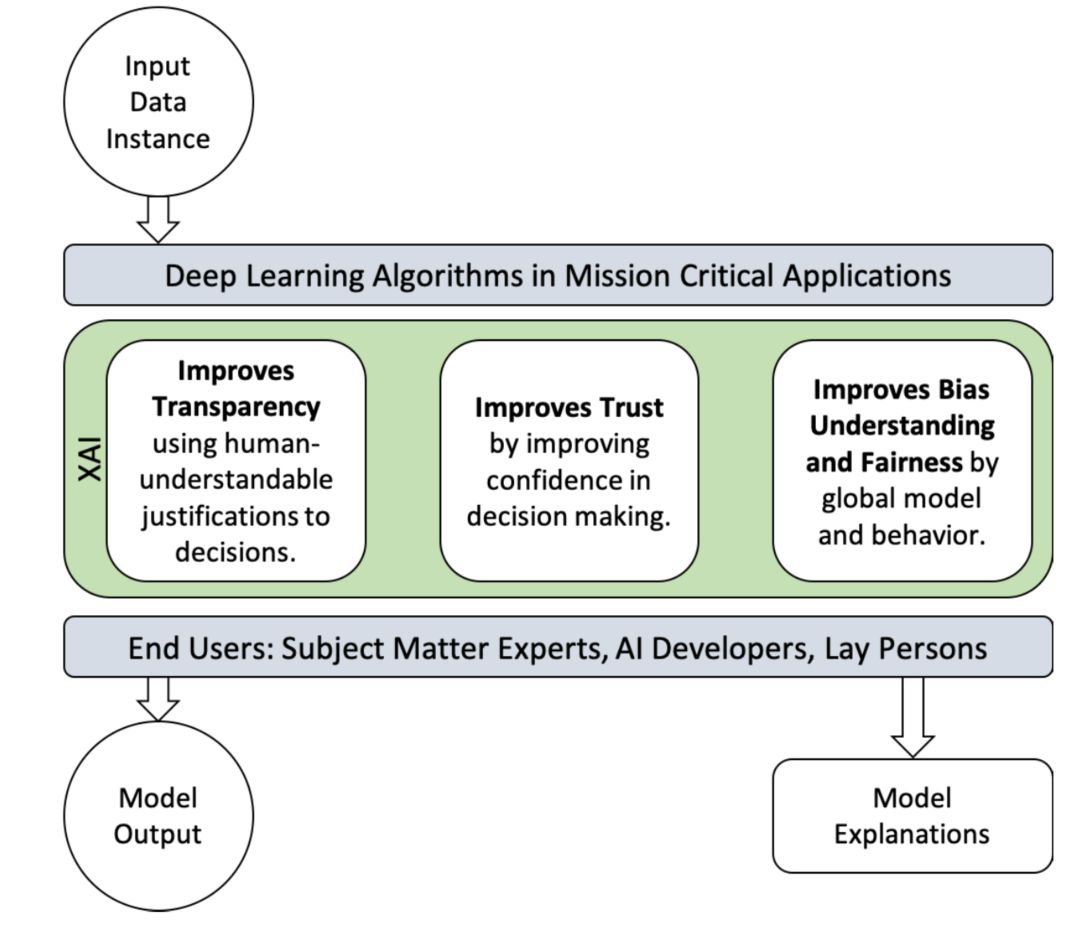

The demand for explainability is growing as AI plays an increasingly significant role in the modern world. Explainability is about building trust, transparency, and accountability between AI systems and their human users.

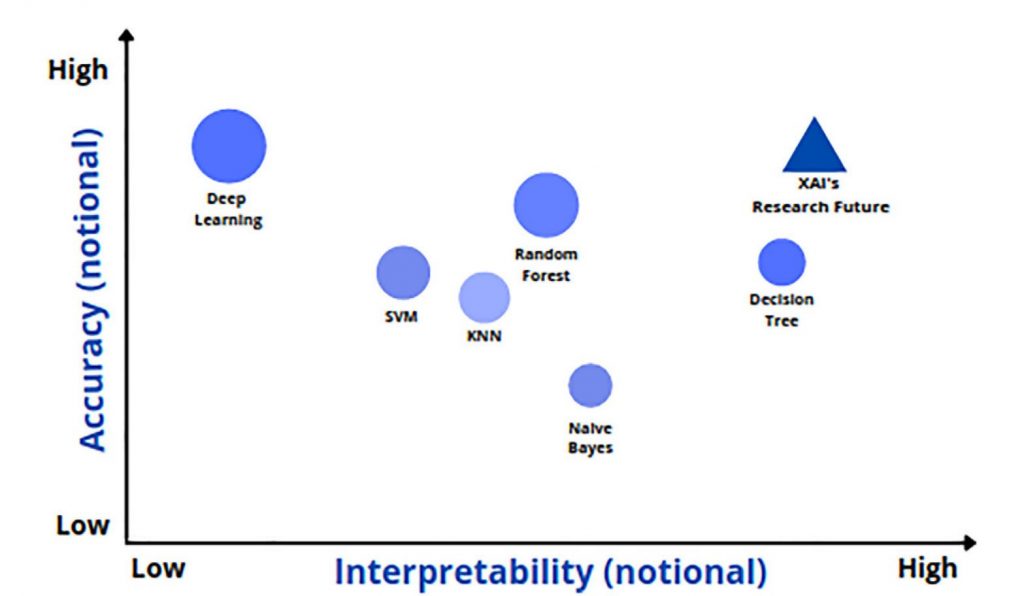

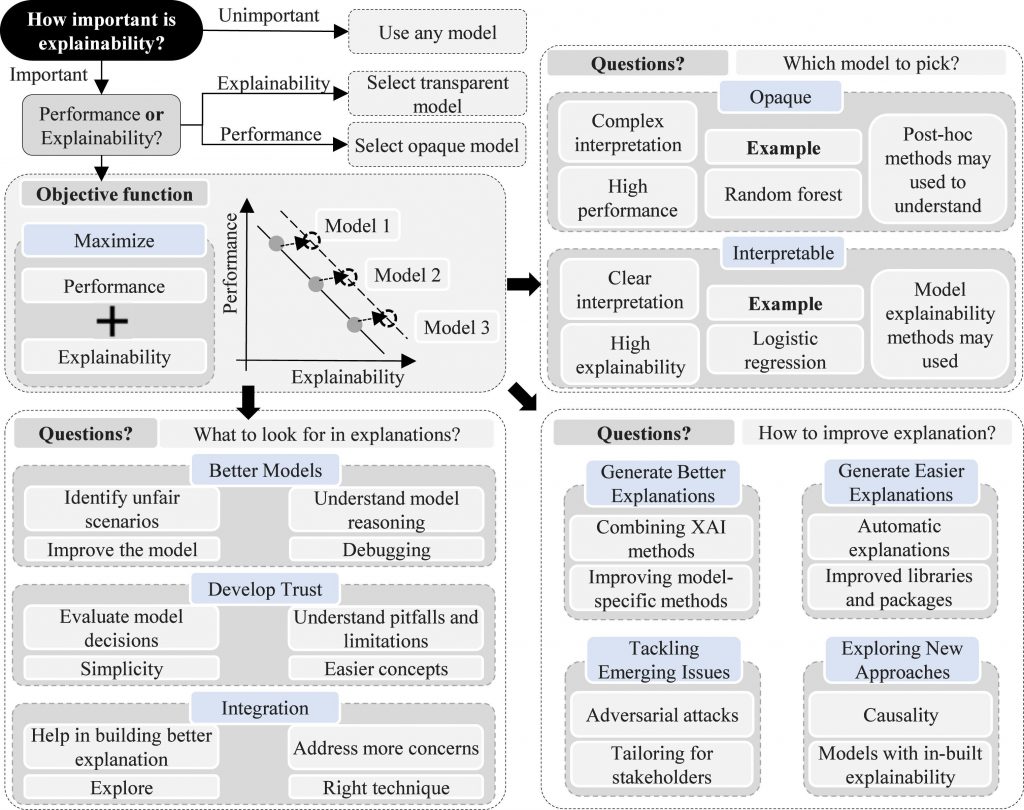

Accuracy vs. interpretability for different machine learning models

Trust and Transparency: The Foundation

From healthcare to finance, AI algorithms are influencing outcomes that have real-world consequences. An AI system might help a doctor diagnose a patient or help a bank decide whether to approve a loan application. Without transparency into how these decisions are made, trust in AI can erode.

Ethical Considerations

AI systems can perpetuate biases, often unintentionally. A study found that COMPAS, a commercial tool for predicting criminal recidivism, exhibited racial bias: Black defendants who did not go on to reoffend were wrongly labeled high-risk at nearly twice the rate of white defendants. XAI can help identify and mitigate these biases by providing a way to scrutinize AI decision-making processes.
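The “nearly twice the rate” finding concerns a specific error type, the false positive rate: among people who did not reoffend, the share labeled high-risk. A minimal Python sketch of such a per-group audit follows. The records are invented toy data rather than COMPAS data, and the helper name `false_positive_rates` is hypothetical.

```python
from collections import defaultdict

# Illustrative sketch: the helper name and the records are hypothetical.
def false_positive_rates(records):
    """Per-group false positive rate: share labeled high-risk among those who did not reoffend."""
    negatives = defaultdict(int)        # people in each group who did not reoffend
    false_positives = defaultdict(int)  # of those, how many were labeled high-risk
    for group, predicted_high_risk, reoffended in records:
        if not reoffended:
            negatives[group] += 1
            if predicted_high_risk:
                false_positives[group] += 1
    return {group: false_positives[group] / negatives[group] for group in negatives}

# Invented toy records: (group, predicted_high_risk, reoffended).
records = [
    ("A", True, False), ("A", True, False), ("A", False, False), ("A", False, False),
    ("A", True, True),
    ("B", True, False), ("B", False, False), ("B", False, False), ("B", False, False),
    ("B", False, True),
]
rates = false_positive_rates(records)
print(rates)                    # {'A': 0.5, 'B': 0.25}
print(rates["A"] / rates["B"])  # 2.0
```

Reporting each error rate separately per group is what surfaces a disparity like this one; a single overall accuracy figure can hide it.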

Expected improvements when using XAI techniques for decision-making of end-users

Regulatory and Legal Compliance

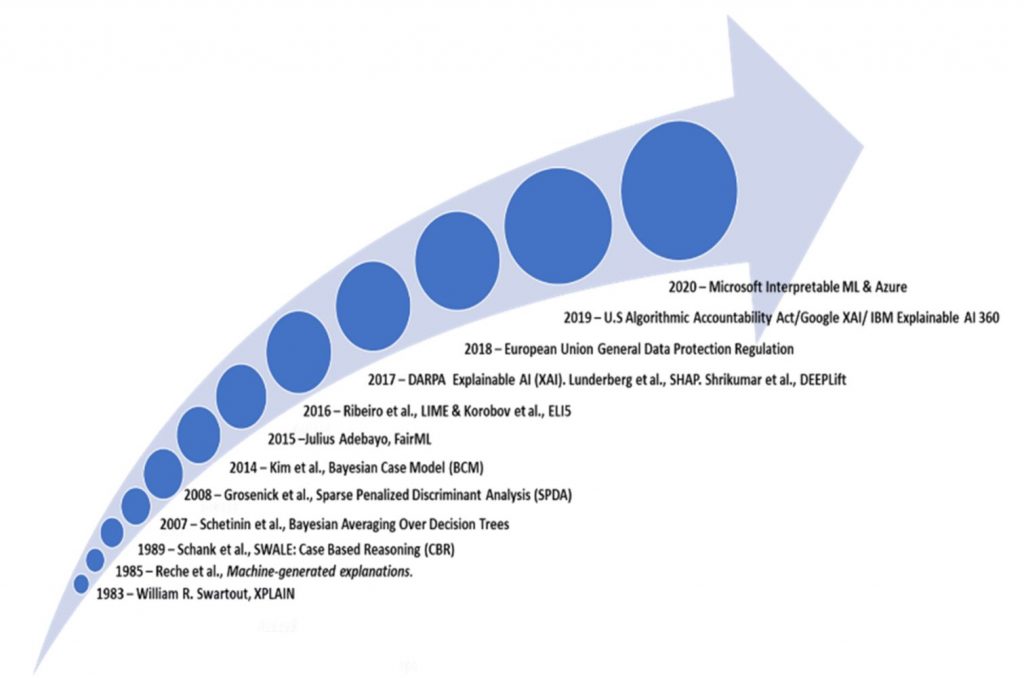

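As AI becomes more prevalent, legislators are developing new regulations to govern its use. The European Union’s General Data Protection Regulation (GDPR) and the California Consumer Privacy Act (CCPA) are two examples of laws that set standards for how personal data is processed. The GDPR, for instance, gives individuals rights concerning solely automated decisions that significantly affect them, including access to meaningful information about the logic involved.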

Enhancing Research and Development

By providing insight into how AI models make decisions, XAI helps researchers identify areas for improvement and develop new, more effective algorithms. This can lead to better AI applications across various industries, from healthcare to environmental science.

Types of Explainable AI

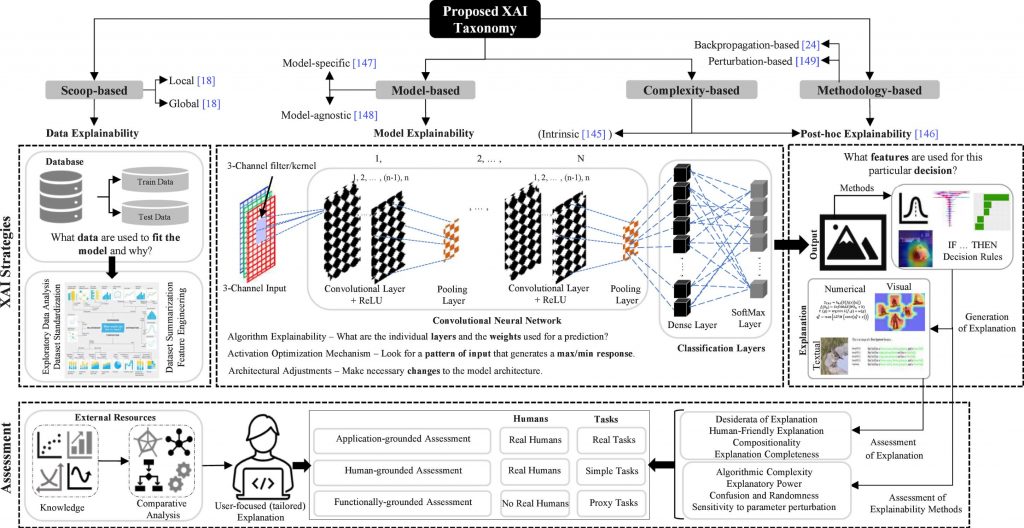

The high-level ontology of explainable artificial intelligence approaches

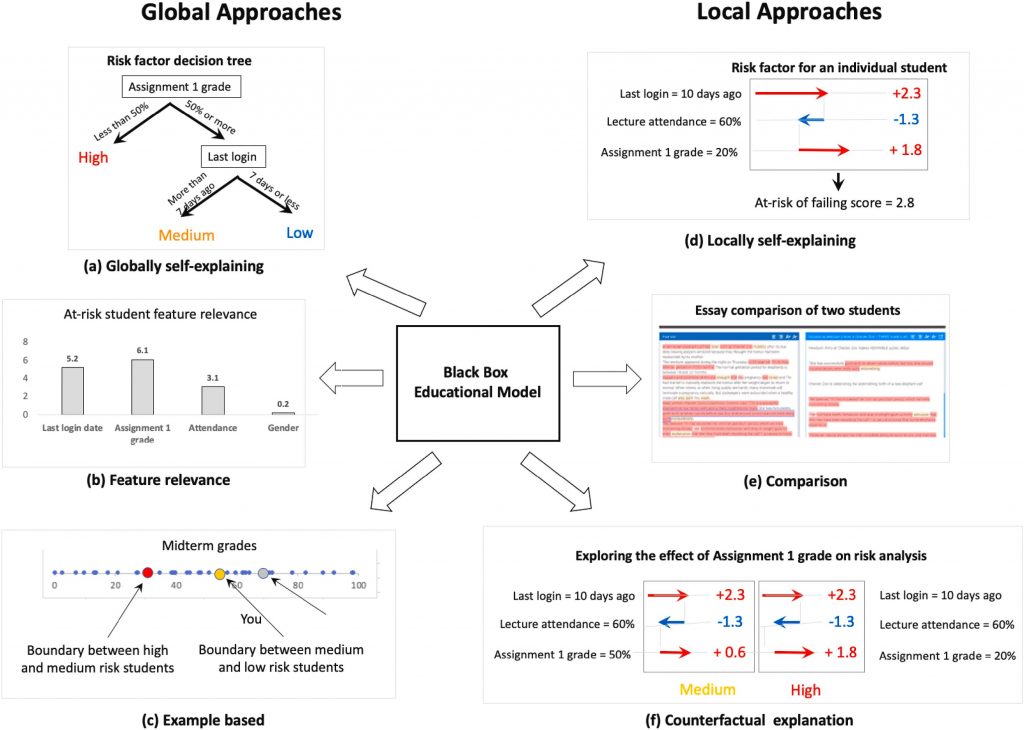

XAI strategies can be broadly categorized into two main groups based on the scope of their explanations: local explainers and global explainers.

Local Explainers

Local explainers focus on providing explanations for individual decisions or predictions made by an AI model. This type of explanation is crucial when the stakes are high for specific decisions, such as diagnosing a patient, approving a loan, or identifying a suspect in a criminal investigation.

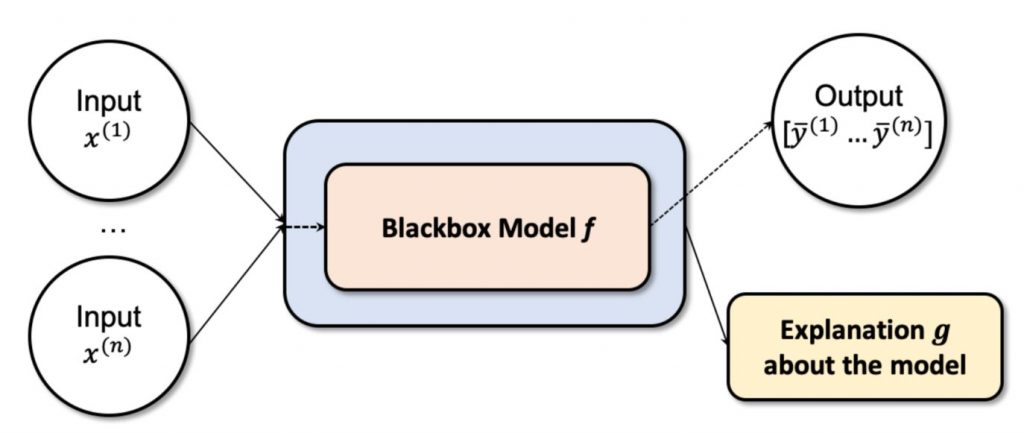

High-level illustration of locally explainable models

Local explanations help users understand why the AI system made a particular decision for a single instance, offering a granular view of the model’s reasoning process. Techniques like LIME (Local Interpretable Model-agnostic Explanations) and SHAP (SHapley Additive exPlanations) are prominent examples that dissect the decision-making process on a case-by-case basis.

Global Explainers

In contrast, global explainers aim to shed light on the overall logic and behavior of an AI model across all possible inputs and scenarios. This perspective is invaluable for developers and stakeholders who seek to validate the model’s generalizability, fairness, and alignment with ethical guidelines.

High-level illustration of a globally explainable algorithm design

Global explanations provide a bird’s-eye view of the model’s decision-making patterns, highlighting how it behaves under various conditions. Techniques that facilitate global explanations often involve model simplification or approximation methods that capture the essence of the model’s logic in a way that is understandable to humans.
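A global surrogate is one such approximation: train a simple, readable model to mimic the complex model’s predictions over many inputs, then report how often the two agree (its fidelity). The plain-Python sketch below assumes a hypothetical `black_box` function and uses a one-split decision stump as the surrogate; in practice one would more likely fit a shallow decision tree with a library such as scikit-learn.

```python
import random

def black_box(x):
    # Hypothetical opaque model: mostly driven by x[0], with a small nonlinear x[1] term.
    return 1 if 0.8 * x[0] + 0.2 * x[1] ** 2 > 0.5 else 0

def fit_stump(X, y):
    """Return (feature, threshold, agreement) for the one-split rule that best mimics y."""
    best = None
    for j in range(len(X[0])):
        for t in sorted({row[j] for row in X}):
            preds = [1 if row[j] > t else 0 for row in X]
            agreement = sum(p == label for p, label in zip(preds, y)) / len(y)
            if best is None or agreement > best[2]:
                best = (j, t, agreement)
    return best

rng = random.Random(0)
X = [[rng.random(), rng.random()] for _ in range(300)]
y_model = [black_box(row) for row in X]   # the surrogate imitates the model, not ground truth
feature, threshold, fidelity = fit_stump(X, y_model)
print(f"Global rule: predict 1 if x[{feature}] > {threshold:.2f} (fidelity {fidelity:.0%})")
```

A fidelity noticeably below 100% is a warning that the simple rule misses part of the model’s behavior; here it cannot capture the small contribution of x[1].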

Interpretability vs. Explainability

While often used interchangeably, interpretability and explainability in AI have distinct meanings.

Interpretability refers to the extent to which a human can understand the cause of an AI system’s decision. It is about the internal mechanics of AI models being intuitive or at least followable.

Common explainability approaches

Explainability, on the other hand, extends beyond making the model’s workings accessible. It is about providing understandable reasons for the decisions, regardless of the model’s complexity. In essence, an interpretable model is inherently explainable, but complex models, often seen in deep learning, require additional mechanisms (such as feature importance scores or surrogate models) to achieve explainability.

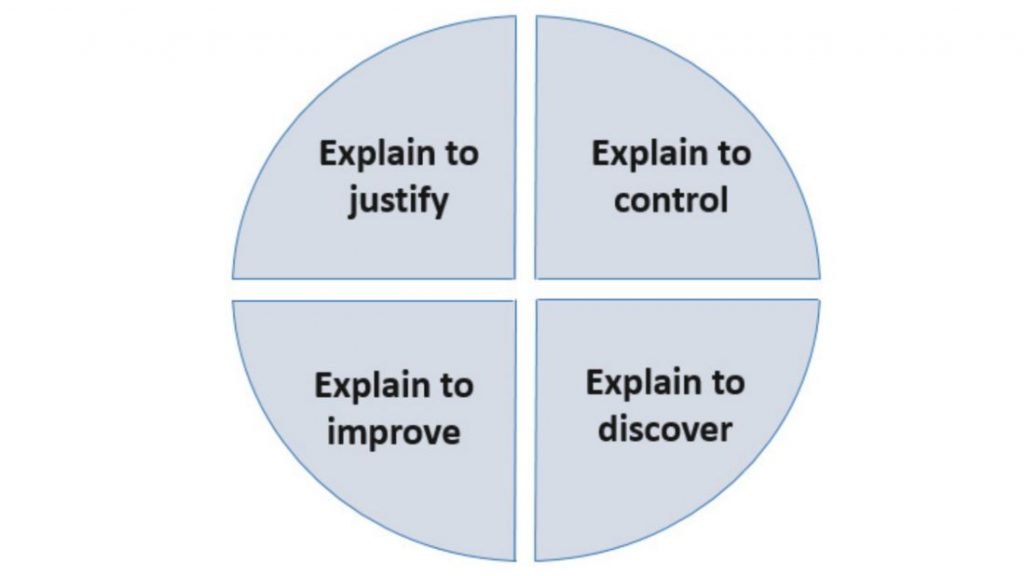

Core Goals of Explainable AI

The development and deployment of Explainable AI (XAI) are guided by core goals that aim to ensure that AI technologies not only advance in capability but also align with ethical standards, safety requirements, and societal needs.

Clarity and Transparency

This means designing AI systems that offer clear insights into their decision-making processes by detailing the model’s architecture to elucidate how inputs are transformed into outputs. Techniques such as feature attribution and decision trees play a crucial role here, allowing users to trace the path of any given decision back to its source data or influencing factors.
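To illustrate tracing a decision back to its influencing factors, here is a minimal sketch with a small, hand-built decision tree for a loan decision. The tree, its feature names, and its thresholds are hypothetical; the point is that every prediction comes with the exact sequence of tests that produced it.

```python
# Hypothetical hand-built decision tree for a loan decision.
# Internal nodes test one feature against a threshold; leaves hold the outcome.
TREE = {
    "feature": "debt_to_income", "threshold": 0.40,
    "left": {                                    # debt_to_income <= 0.40
        "feature": "credit_history_years", "threshold": 2,
        "left": {"leaf": "deny"},
        "right": {"leaf": "approve"},
    },
    "right": {"leaf": "deny"},                   # debt_to_income > 0.40
}

def explain_path(node, applicant):
    """Return the decision and the list of tests that led to it."""
    path = []
    while "leaf" not in node:
        name, t = node["feature"], node["threshold"]
        value = applicant[name]
        if value <= t:
            path.append(f"{name} = {value} <= {t}")
            node = node["left"]
        else:
            path.append(f"{name} = {value} > {t}")
            node = node["right"]
    return node["leaf"], path

decision, path = explain_path(TREE, {"debt_to_income": 0.25, "credit_history_years": 5})
print(decision)   # approve
for step in path:
    print(" -", step)
```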

Human-Centered and Socially Beneficial Outcomes

XAI For Developing Smart Cities

This involves designing models that consider the impact of decisions on individuals and communities, aiming to enhance human capabilities without displacing or disadvantaging them. For instance, in healthcare, XAI solutions strive to provide diagnostic insights that augment physicians’ expertise, improving patient outcomes while respecting the nuances of individual patient care. Similarly, in urban planning, XAI can help design more efficient and equitable public services, from transportation to resource allocation.

Ensuring Safety and Security

Safety and security are paramount in the deployment of AI systems. By elucidating the operational parameters of AI models, XAI helps identify potential risks and vulnerabilities early in the design phase, allowing for timely mitigations. In autonomous vehicle technologies, for example, explainability aids in understanding the vehicle’s decision-making in critical situations, enhancing passenger safety through transparency.

Responsibility in AI Development and Deployment

Relations between XAI Concepts

This means that developers and organizations are encouraged to take accountability for the AI systems they create, ensuring these technologies are used ethically and beneficially. Responsible AI involves rigorous testing for biases, continuous monitoring for unintended consequences, and the willingness to adjust based on feedback from real-world use. It also entails clear communication with users about how AI systems operate, their limitations, and the rationale behind their decisions.

Techniques and Methods for Achieving Explainability

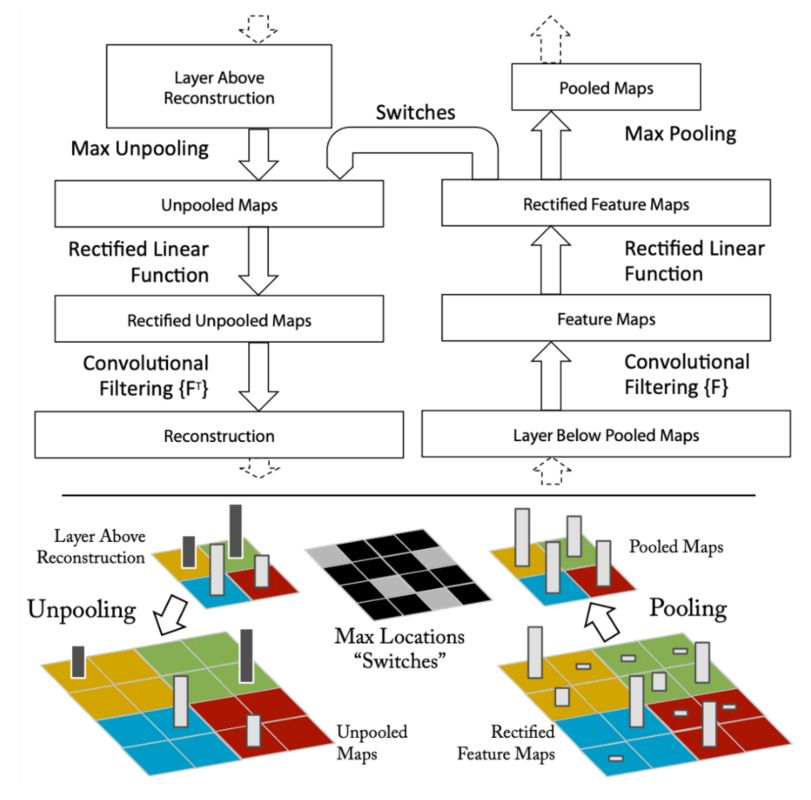

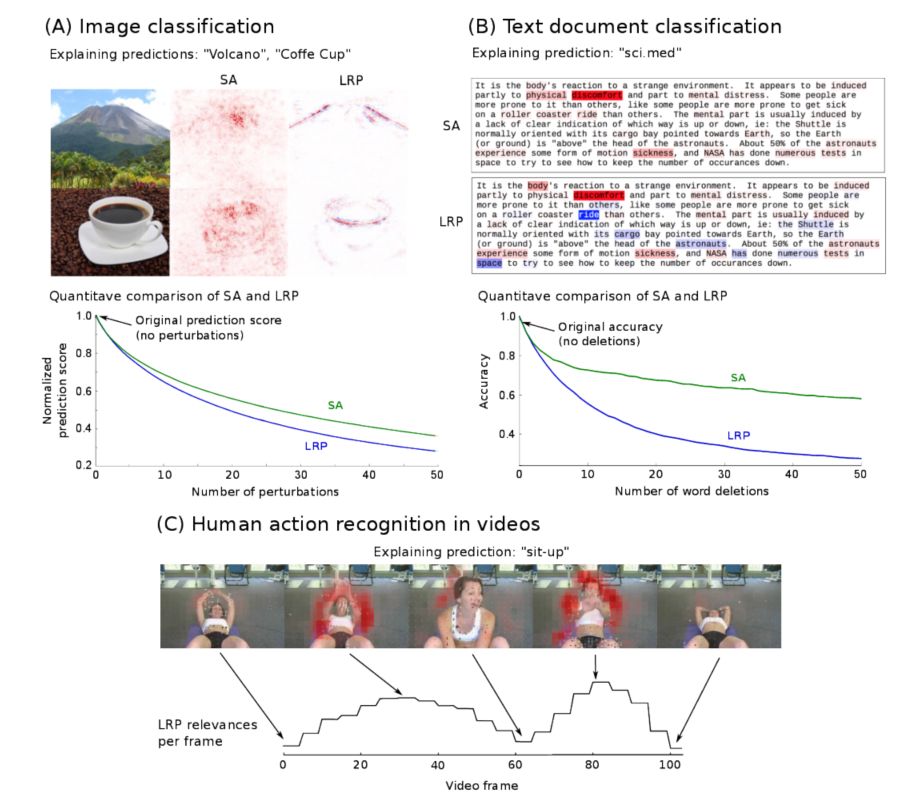

Sample perturbation-based XAI method in Deep Convolutional Network

Achieving explainability in AI involves a multifaceted approach, employing various techniques and methods designed to make AI systems more understandable and their decisions more transparent.

Feature Importance and Weight Assignment

Feature importance techniques assign each input feature a score reflecting how strongly the model relies on it. Some models provide this directly: Random Forests, for example, have a built-in importance measure based on how much each feature’s splits reduce impurity across the trees, which can be crucial for feature selection during model development.

Model-agnostic techniques, such as permutation importance, instead measure how much the model’s prediction error (or loss) increases after the values of a single feature are randomly shuffled. Shuffling breaks that feature’s relationship with the target, providing a more dynamic insight into feature relevance.
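Permutation importance is simple enough to sketch in plain Python. The model below is a hypothetical fixed function standing in for a trained model, and the data are synthetic; scikit-learn offers a production version as `sklearn.inspection.permutation_importance`, which should normally be computed on held-out data.

```python
import random

def model(x):
    # Hypothetical trained model: relies strongly on x[0], weakly on x[1], not at all on x[2].
    return 3.0 * x[0] + 0.5 * x[1]

def mse(X, y):
    return sum((model(row) - target) ** 2 for row, target in zip(X, y)) / len(y)

def permutation_importance(X, y, n_repeats=10, seed=0):
    """Mean increase in MSE when each feature's column is shuffled."""
    rng = random.Random(seed)
    baseline = mse(X, y)
    importances = []
    for j in range(len(X[0])):
        increases = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)                          # break feature j's link to the target
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            increases.append(mse(X_perm, y) - baseline)
        importances.append(sum(increases) / n_repeats)
    return importances

rng = random.Random(42)
X = [[rng.random() for _ in range(3)] for _ in range(200)]
y = [3.0 * a + 0.5 * b + rng.gauss(0, 0.1) for a, b, _ in X]   # synthetic targets
importances = permutation_importance(X, y)
print([round(v, 3) for v in importances])   # third score is exactly 0.0: the model ignores x[2]
```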

Local and Global Interpretability Techniques

- LIME (Local Interpretable Model-agnostic Explanations): LIME explains a single prediction by fitting a surrogate model that approximates the complex model only in the neighborhood of that instance. It perturbs the input by making slight variations, records the complex model’s output for each perturbed sample, and weights each sample by its proximity to the original instance. A simpler, interpretable model, such as a linear regression or a shallow decision tree, is then trained on this weighted dataset so that it is locally faithful to the original model.

- SHAP (SHapley Additive exPlanations): SHAP values, grounded in cooperative game theory, assign each feature an importance value for a particular prediction. A defining property is additivity: the SHAP values for a prediction sum to the difference between that prediction and the model’s average prediction over a background dataset, and the allocation also satisfies a consistency property. SHAP integrates with many model types and provides a unified measure of feature importance across models.
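For a handful of features, Shapley values can be computed exactly by averaging each feature’s marginal contribution over every coalition of the other features. The sketch below assumes a hypothetical model and a single all-zero baseline (so the “average prediction” is just the baseline prediction); it checks the additivity property directly. Exact enumeration needs on the order of 2^n model evaluations, which is why the shap library relies on approximations such as KernelSHAP or model-specific algorithms such as TreeSHAP.

```python
from itertools import combinations
from math import factorial

def model(x):
    # Hypothetical model with an interaction between x[0] and x[2], for illustration only.
    return 2.0 * x[0] + 1.0 * x[1] + 3.0 * x[0] * x[2]

def shapley_values(f, x, baseline):
    """Exact Shapley values; features outside a coalition are set to their baseline value."""
    n = len(x)

    def value(coalition):
        return f([x[i] if i in coalition else baseline[i] for i in range(n)])

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):                   # coalition sizes 0 .. n-1 that exclude i
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi

x, baseline = [1.0, 2.0, 1.0], [0.0, 0.0, 0.0]
phi = shapley_values(model, x, baseline)
print([round(v, 6) for v in phi])                        # [3.5, 2.0, 1.5]
print(round(sum(phi), 6), model(x) - model(baseline))    # 7.0 7.0
```

The interaction term 3·x[0]·x[2] is split evenly between x[0] and x[2], which is how Shapley values share credit for joint effects.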

Rule Extraction from Complex AI Models

Rule extraction techniques convert the decision patterns of AI models into a series of if-then rules, making the model’s decisions more interpretable. Decision trees, for example, inherently facilitate rule extraction through their structure. However, for neural networks, algorithms like Trepan or techniques based on genetic programming are employed to extract symbolic rules. This involves simplifying the model’s decision function to a form that can be readily understood and articulated, albeit at the potential cost of losing some nuances of the original model’s decision-making process.
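For a decision tree, rule extraction is exact: each root-to-leaf path becomes one if-then rule. The sketch below assumes a small, hypothetical equipment-maintenance tree encoded as nested tuples. Extracting rules from a neural network (for example with Trepan) is an approximation of the network and is not shown here.

```python
# Hypothetical maintenance tree: (feature, threshold, subtree_if_le, subtree_if_gt) or a leaf label.
TREE = ("vibration_mm_s", 7.0,
        ("temperature_c", 80.0, "ok", "inspect"),
        "likely_failure")

def extract_rules(node, conditions=()):
    """Turn every root-to-leaf path into an if-then rule."""
    if isinstance(node, str):                    # leaf reached: emit one rule
        premise = " AND ".join(conditions) or "TRUE"
        return [f"IF {premise} THEN {node}"]
    feature, threshold, le_branch, gt_branch = node
    return (extract_rules(le_branch, conditions + (f"{feature} <= {threshold}",))
            + extract_rules(gt_branch, conditions + (f"{feature} > {threshold}",)))

for rule in extract_rules(TREE):
    print(rule)
# IF vibration_mm_s <= 7.0 AND temperature_c <= 80.0 THEN ok
# IF vibration_mm_s <= 7.0 AND temperature_c > 80.0 THEN inspect
# IF vibration_mm_s > 7.0 THEN likely_failure
```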

Visualization of AI Decision-Making Processes

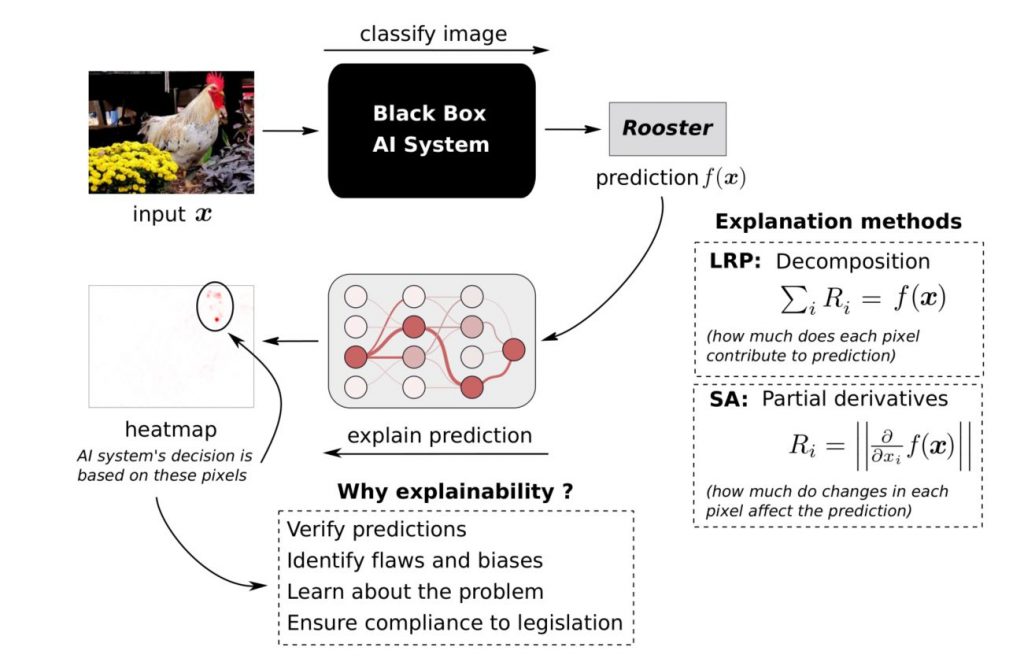

Explaining predictions of an AI system

Visualizing AI decision-making encompasses several methods, each suited to different aspects of the model:

- Decision Trees: These can be visualized directly, showcasing the decision paths from root to leaf for classification or regression outcomes.

- Heat Maps: In deep learning, heat maps can highlight parts of the input (like pixels in image processing) that significantly impact the model’s output, offering insights into feature relevance.

- Dimensionality Reduction: Techniques like PCA (Principal Component Analysis) or t-SNE (t-Distributed Stochastic Neighbor Embedding) are used to reduce high-dimensional data to two or three dimensions for visualization, helping to uncover the data’s underlying structure and how it influences model decisions.
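One simple way to produce such a heat map is occlusion, a perturbation-based method: hide one patch of the input at a time and record how much the model’s score drops. Gradient-based methods such as Grad-CAM work differently. The sketch below assumes a hypothetical “classifier” that only looks at the top-left corner of a tiny 4x4 image, so the expected heat map is easy to check by hand.

```python
def model(image):
    # Hypothetical classifier score: responds only to brightness in the top-left 2x2 patch.
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4.0

def occlusion_heatmap(f, image, patch=1, fill=0.0):
    """Score drop when each patch is replaced by `fill`; a larger drop means more important."""
    base = f(image)
    h, w = len(image), len(image[0])
    heat = [[0.0] * w for _ in range(h)]
    for r0 in range(0, h, patch):
        for c0 in range(0, w, patch):
            occluded = [row[:] for row in image]
            cells = [(r, c) for r in range(r0, min(r0 + patch, h))
                     for c in range(c0, min(c0 + patch, w))]
            for r, c in cells:
                occluded[r][c] = fill            # hide this patch
            drop = base - f(occluded)
            for r, c in cells:
                heat[r][c] = drop                # every pixel in the patch gets the same score
    return heat

image = [[1.0] * 4 for _ in range(4)]            # a uniform 4x4 "image"
for row in occlusion_heatmap(model, image):
    print(row)
# [0.25, 0.25, 0.0, 0.0]
# [0.25, 0.25, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
# [0.0, 0.0, 0.0, 0.0]
```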

Each technique contributes to peeling back the layers of AI’s complexity, providing users and developers with the means to understand, trust, and effectively use AI systems. For those looking to implement these techniques, libraries like scikit-learn for Python offer robust tools for feature importance, while frameworks like SHAP and LIME provide specialized functions for local explanations. Visualization can be achieved using tools such as Matplotlib, Seaborn, or Plotly for Python, facilitating the creation of interpretable and insightful graphical representations of AI decision-making.

Implementing Explainable AI

Implementing XAI can vary greatly depending on the application and industry. For instance, in healthcare, a predictive model for patient diagnosis can employ SHAP values to explain which clinical variables contribute most to a diagnosis. In finance, LIME could be used to elucidate credit scoring algorithms, clarifying why a loan application was approved or denied.
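To make the credit-scoring case concrete, here is a simplified LIME-style sketch in plain Python. It is not the lime package’s API: it samples Gaussian perturbations around one applicant, weights them with an exponential proximity kernel, and fits an ordinary weighted least-squares line, whereas the library adds details such as feature discretization and a regularized regressor. The `black_box` model, its coefficients, and the kernel settings are all hypothetical.

```python
import math
import random

def black_box(income_k, debt_k):
    # Hypothetical credit model returning an approval probability.
    z = 0.06 * income_k - 0.12 * debt_k - 0.5
    return 1.0 / (1.0 + math.exp(-z))

def solve(A, b):
    """Solve the small linear system A x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (M[r][n] - sum(M[r][c] * x[c] for c in range(r + 1, n))) / M[r][r]
    return x

def lime_explain(f, instance, scale=5.0, kernel_width=5.0, n_samples=500, seed=0):
    """LIME-style sketch: perturb around `instance`, weight by proximity, fit weighted least squares."""
    rng = random.Random(seed)
    rows, targets, weights = [], [], []
    for _ in range(n_samples):
        offsets = [rng.gauss(0.0, scale) for _ in instance]
        rows.append([1.0] + offsets)                       # intercept column + offsets
        targets.append(f(*[v + d for v, d in zip(instance, offsets)]))
        weights.append(math.exp(-sum(d * d for d in offsets) / kernel_width ** 2))
    k = len(rows[0])
    # Weighted normal equations: (X^T W X) beta = X^T W y
    xtwx = [[sum(w * r[i] * r[j] for r, w in zip(rows, weights)) for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * t for r, t, w in zip(rows, targets, weights)) for i in range(k)]
    beta = solve(xtwx, xtwy)
    return beta[1:]    # local slope per feature; beta[0] approximates f(instance)

coef_income, coef_debt = lime_explain(black_box, [50.0, 20.0])
print(f"income: {coef_income:+.4f} per $1k, debt: {coef_debt:+.4f} per $1k")
```

The signs and relative sizes of the two local slopes are the explanation: near this applicant, an extra $1k of debt lowers the approval probability roughly twice as much as an extra $1k of income raises it.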

The Role of Libraries and Tools in Facilitating XAI

Several libraries and tools have been developed to facilitate the implementation of XAI:

- LIME and SHAP: Both libraries offer Python-based implementations for generating explanations for the predictions of any classifier or regressor, regardless of its complexity. They are instrumental in breaking down the prediction into understandable contributions from each input feature.

- Alibi: This library provides algorithms for explaining model predictions and understanding model behavior, including methods like Counterfactual Explanations and Anchor Explanations, which can be particularly useful for complex decision-making scenarios.

- AI Fairness 360 (AIF360): Developed by IBM, this tool is designed to help identify and mitigate bias in machine learning models, ensuring that the models’ decisions are fair and equitable across different groups.
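A counterfactual explanation answers “what is the smallest change that would have flipped this decision?” Alibi implements optimization-based methods for this; the sketch below is a deliberately simple brute-force grid search, not Alibi’s API. The scoring rule, the applicant, and the allowed step sizes are hypothetical, and integer values are used so the arithmetic is exact.

```python
from itertools import product

def approved(applicant):
    # Hypothetical integer scoring rule standing in for a trained credit model.
    score = 2 * applicant["income_k"] - 4 * applicant["debt_k"]
    return score >= 35

def counterfactual(applicant, steps, max_steps=10):
    """Return (cost, changed_applicant) for the fewest total steps that flip the decision."""
    names = list(steps)
    best = None
    for moves in product(range(max_steps + 1), repeat=len(names)):
        cost = sum(moves)
        if best is not None and cost >= best[0]:
            continue                             # cannot beat the cheapest change found so far
        candidate = dict(applicant)
        for name, k in zip(names, moves):
            candidate[name] += k * steps[name]
        if approved(candidate):
            best = (cost, candidate)
    return best

applicant = {"income_k": 40, "debt_k": 25}
print(approved(applicant))   # False
# Allowed moves (hypothetical): raise income by $5k or pay down $5k of debt per step.
print(counterfactual(applicant, {"income_k": 5, "debt_k": -5}))
# (3, {'income_k': 40, 'debt_k': 10})
```

Read as an explanation, the result says: “had your debt been $15k lower, the application would have been approved.”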

Case Studies on the Implementation of XAI

How XAI Affects Different Stakeholders

Healthcare: A notable example is the use of XAI in diagnosing diabetic retinopathy. Researchers used deep learning models to analyze retinal images, with tools like Grad-CAM providing visual explanations by highlighting the areas of the retina that influenced the model’s diagnosis the most.

Finance: Banks and financial institutions use XAI to explain credit decisions. By implementing models with built-in explanation capabilities, they can provide customers with reasons for a loan’s approval or rejection, based on factors such as credit history, income, and debts, thereby making the decision-making process transparent and understandable.
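For a linear scorecard, one simple way to produce such reasons is to compare each feature’s contribution against a reference applicant and report the most negative ones. For a linear model, w_i·(x_i − r_i) is exactly that feature’s Shapley value relative to the reference point, so this is SHAP in its simplest case. The weights, reference values, and feature names below are hypothetical, and real adverse-action procedures vary by lender and jurisdiction.

```python
# Hypothetical linear scorecard weights (points per unit) and a reference applicant.
WEIGHTS = {"years_of_credit_history": 0.5, "income_k": 0.05, "debt_to_income_pct": -0.1}
REFERENCE = {"years_of_credit_history": 8, "income_k": 60, "debt_to_income_pct": 25}

def reason_codes(applicant, top_k=2):
    """Rank features by how far they pulled the score below the reference applicant."""
    contributions = {
        name: weight * (applicant[name] - REFERENCE[name]) for name, weight in WEIGHTS.items()
    }
    adverse = sorted((c, name) for name, c in contributions.items() if c < 0)
    return [name for _, name in adverse[:top_k]], contributions

applicant = {"years_of_credit_history": 3, "income_k": 70, "debt_to_income_pct": 45}
reasons, contributions = reason_codes(applicant)
print(reasons)   # ['years_of_credit_history', 'debt_to_income_pct']
```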

Manufacturing: Companies use AI to identify potential equipment failures before they occur. For example, a manufacturer might use a neural network to predict machine breakdowns, employing XAI techniques to highlight the specific sensor readings or conditions leading to a predicted failure, allowing for targeted preventative measures.

Implementing XAI not only aids in making AI systems more transparent and their decisions more understandable but also helps in building trust among users and stakeholders.

Advantages of Explainable AI

Step-by-step approach to the application of XAI

The push towards Explainable AI (XAI) is driven by its numerous advantages, impacting everything from user trust to regulatory compliance.

Improved Understanding and Transparency

XAI significantly enhances the clarity with which AI systems operate, making the inner workings of complex models accessible and understandable. This improved understanding is vital for sensitive applications like healthcare, where knowing the “why” behind a diagnosis or treatment recommendation can be as crucial as the decision itself.

Facilitating Faster Adoption of AI Technologies

The transparency and trust established by XAI pave the way for faster adoption of AI across various sectors. By demystifying AI operations, organizations can alleviate concerns about the “black box” nature of AI systems, encouraging broader acceptance and use.

Debugging and Optimization Benefits

XAI offers practical benefits in debugging and optimizing AI models. By understanding how an AI system arrives at its decisions, developers can identify and correct errors in the model’s logic or data handling. This capability is crucial for improving model accuracy and performance.

Regulatory Compliance and Ethical Considerations

Regulatory compliance is a growing concern as AI becomes more prevalent in decision-making processes. XAI helps ensure that AI systems adhere to legal and ethical standards, providing the necessary documentation and rationale for decisions to satisfy regulatory requirements.

Challenges and Limitations of XAI

Major XAI milestones since 1983

Despite the significant advancements and benefits associated with Explainable AI (XAI), several challenges and limitations persist in its implementation and effectiveness.

Complexity and Inherent Limitations of Certain AI Models

- Deep Neural Networks (DNNs): The complexity of DNNs arises from their many layers and the non-linear transformations between them. The sheer volume of parameters, often numbering in the millions or even billions, complicates the traceability of decisions. Techniques like Layer-wise Relevance Propagation (LRP) attempt to address this by propagating a prediction backward through the network, layer by layer, to assign a relevance score to each input feature, yet the granularity and interpretability of such explanations remain challenging.
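The backward pass of LRP can be sketched on a tiny network. Below, a hypothetical two-input, two-hidden-unit ReLU network without biases is explained with the LRP-epsilon rule, which redistributes each neuron’s relevance to its inputs in proportion to their contributions a_i·w_ij. With no biases and a small epsilon, the input relevances sum (approximately) to the output, the “conservation” property LRP is designed around. The weights and inputs are illustrative only.

```python
def forward(x, W1, W2):
    """Two-layer ReLU network without biases; W1[i][j] links input i to hidden unit j."""
    hidden = [max(0.0, sum(x[i] * W1[i][j] for i in range(len(x)))) for j in range(len(W1[0]))]
    out = sum(hidden[j] * W2[j] for j in range(len(hidden)))
    return hidden, out

def lrp_epsilon(x, W1, W2, eps=1e-6):
    """Propagate the output score back to the inputs with the LRP-epsilon rule."""
    hidden, out = forward(x, W1, W2)

    def stabilize(z):
        return z + eps if z >= 0 else z - eps   # avoid division by (near) zero

    # Output -> hidden: each hidden unit gets its share of the output relevance.
    z_out = stabilize(sum(hidden[j] * W2[j] for j in range(len(hidden))))
    r_hidden = [hidden[j] * W2[j] / z_out * out for j in range(len(hidden))]

    # Hidden -> input: redistribute each hidden unit's relevance over its inputs.
    r_input = [0.0] * len(x)
    for j in range(len(hidden)):
        z_j = stabilize(sum(x[i] * W1[i][j] for i in range(len(x))))
        for i in range(len(x)):
            r_input[i] += x[i] * W1[i][j] / z_j * r_hidden[j]
    return out, r_input

W1 = [[1.0, -1.0], [0.5, 1.0]]   # illustrative weights
W2 = [1.0, 0.5]
out, relevance = lrp_epsilon([1.0, 2.0], W1, W2)
print(out, [round(r, 4) for r in relevance])   # 2.5 [0.5, 2.0]
```

Note that input 0 receives negative relevance through the second hidden unit, which partly cancels its positive share from the first; relevance scores can be negative as well as positive.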

Balancing Performance with Explainability

- Trade-off Metrics: Quantifying the trade-off between model performance (e.g., accuracy, precision, recall) and explainability involves developing metrics that can evaluate explainability in a meaningful way. Current research explores creating composite metrics that can guide the development of models that do not compromise significantly on either front. However, the subjective nature of what constitutes a “good” explanation complicates these efforts.

Addressing the Black-Box Nature of Some Algorithms

- Model-Agnostic Methods: To tackle the black-box nature, model-agnostic methods like Partial Dependence Plots (PDP) and Accumulated Local Effects (ALE) plots provide insights into the model’s behavior without needing to access its internal workings. These methods, however, can be computationally intensive and may not offer the specific, instance-level explanations needed for full transparency.
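A partial dependence curve needs only the ability to call the model: force one feature to each grid value for every row, and average the predictions. The sketch below assumes a hypothetical model and synthetic data; it also shows where the cost comes from (grid size times dataset size model calls). The per-row curves before averaging are known as individual conditional expectation (ICE) curves, the instance-level counterpart.

```python
import random

def model(x):
    # Hypothetical black box: nonlinear in x[0], plus an x[0] * x[1] interaction.
    return x[0] ** 2 + x[0] * x[1]

def partial_dependence(f, X, feature, grid):
    """Average prediction when `feature` is set to each grid value for every row."""
    curve = []
    for value in grid:
        total = 0.0
        for row in X:
            modified = list(row)
            modified[feature] = value
            total += f(modified)                 # len(grid) * len(X) model calls in total
        curve.append(total / len(X))
    return curve

rng = random.Random(0)
X = [[rng.uniform(-1, 1), rng.uniform(-1, 1)] for _ in range(1000)]
grid = [-1.0, -0.5, 0.0, 0.5, 1.0]
curve = partial_dependence(model, X, 0, grid)
for v, pd in zip(grid, curve):
    print(f"x0 = {v:+.1f} -> average prediction {pd:.3f}")
```

The resulting U shape reflects the x[0]² term, while the interaction with x[1] largely averages out, a reminder that averaged plots can hide effects that individual instances experience.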

Contextual Understanding in Complex Systems

- Incorporating Domain Knowledge: Enhancing contextual understanding requires integrating domain knowledge into the AI system, a process known as knowledge infusion. This can be achieved through techniques like ontological engineering or embedding expert systems within AI models. The challenge lies in accurately capturing and encoding domain knowledge in a way that the AI system can utilize without oversimplifying the complexities of the real world.

In addressing these challenges, advancements in AI interpretability methods, such as neural-symbolic integration, which aims to combine the learning capabilities of neural networks with the interpretability of symbolic AI, are promising. Additionally, the development of benchmarks for explainability and the standardization of explanation formats could help in evaluating and improving XAI solutions.

Conclusion

Explainable AI (XAI) stands at the intersection of technology and ethics, driving forward AI’s potential while ensuring it adheres to transparency, fairness, and privacy. It is instrumental in demystifying AI’s “black box” by providing clear insights into decision-making processes, thus helping to mitigate biases and enhance fairness across applications.

As AI technologies become increasingly embedded in our daily lives, the role of XAI in mediating the relationship between AI systems and their human users will be paramount, guiding the responsible deployment of AI solutions across industries.

The path towards achieving fully explainable AI encapsulates a broader commitment to ethical technology development, balancing AI’s transformative potential with the necessity for accountability.